Influence Dynamics and Stagewise Data Attribution

October 14, 2025 | Lee et al.

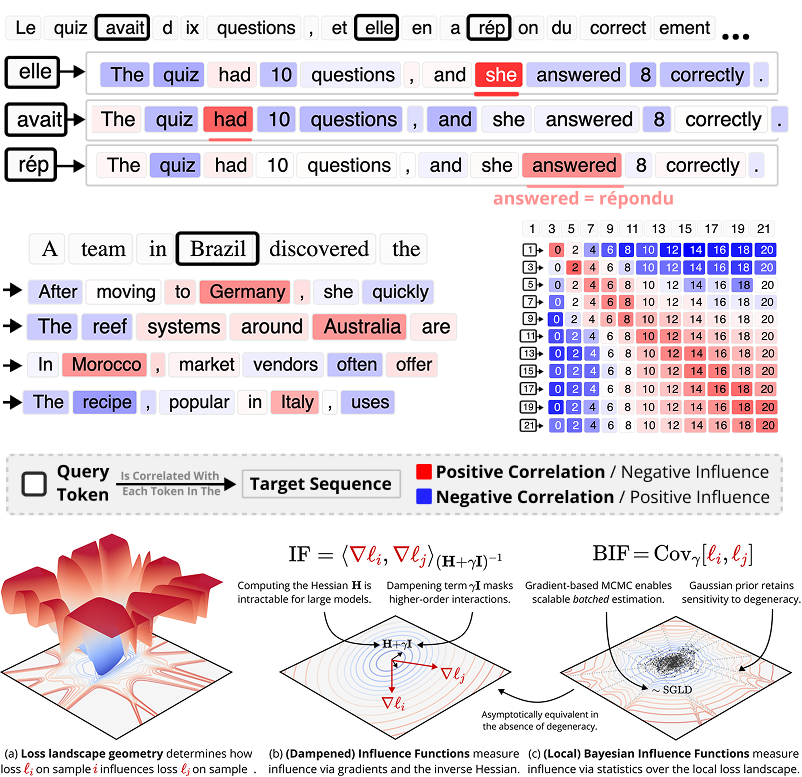

Current training data attribution (TDA) methods treat the influence one sample has on another as sta...

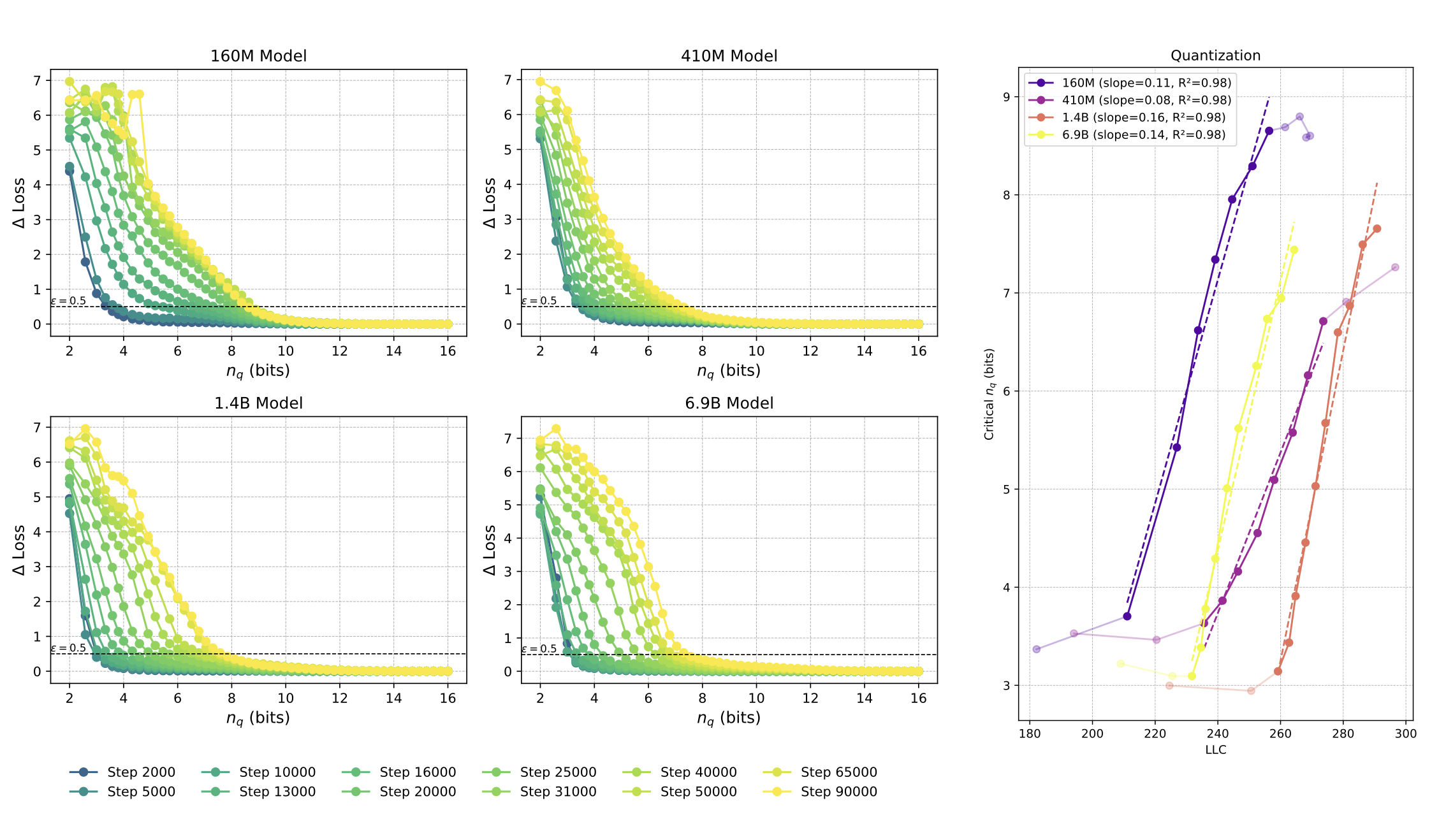

Compressibility Measures Complexity: Minimum Description Length Meets Singular Learning Theory

October 14, 2025 | Urdshals et al.

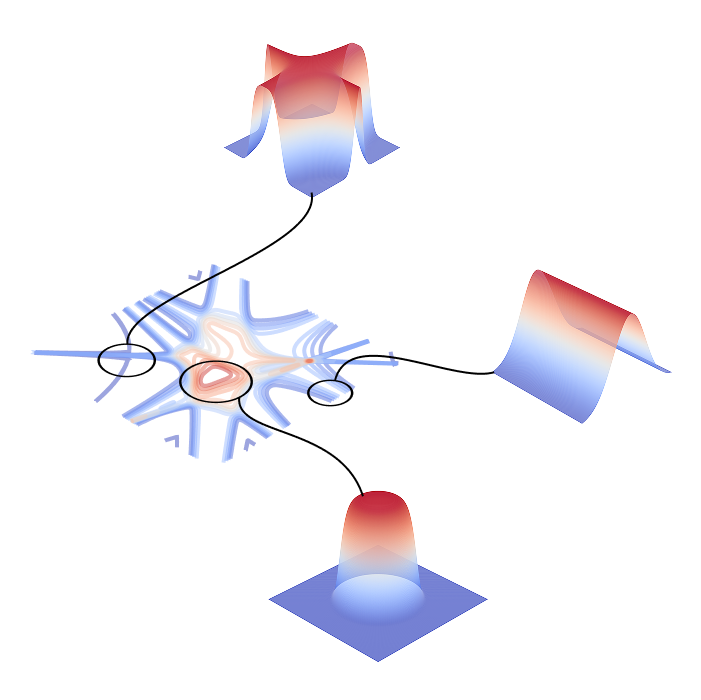

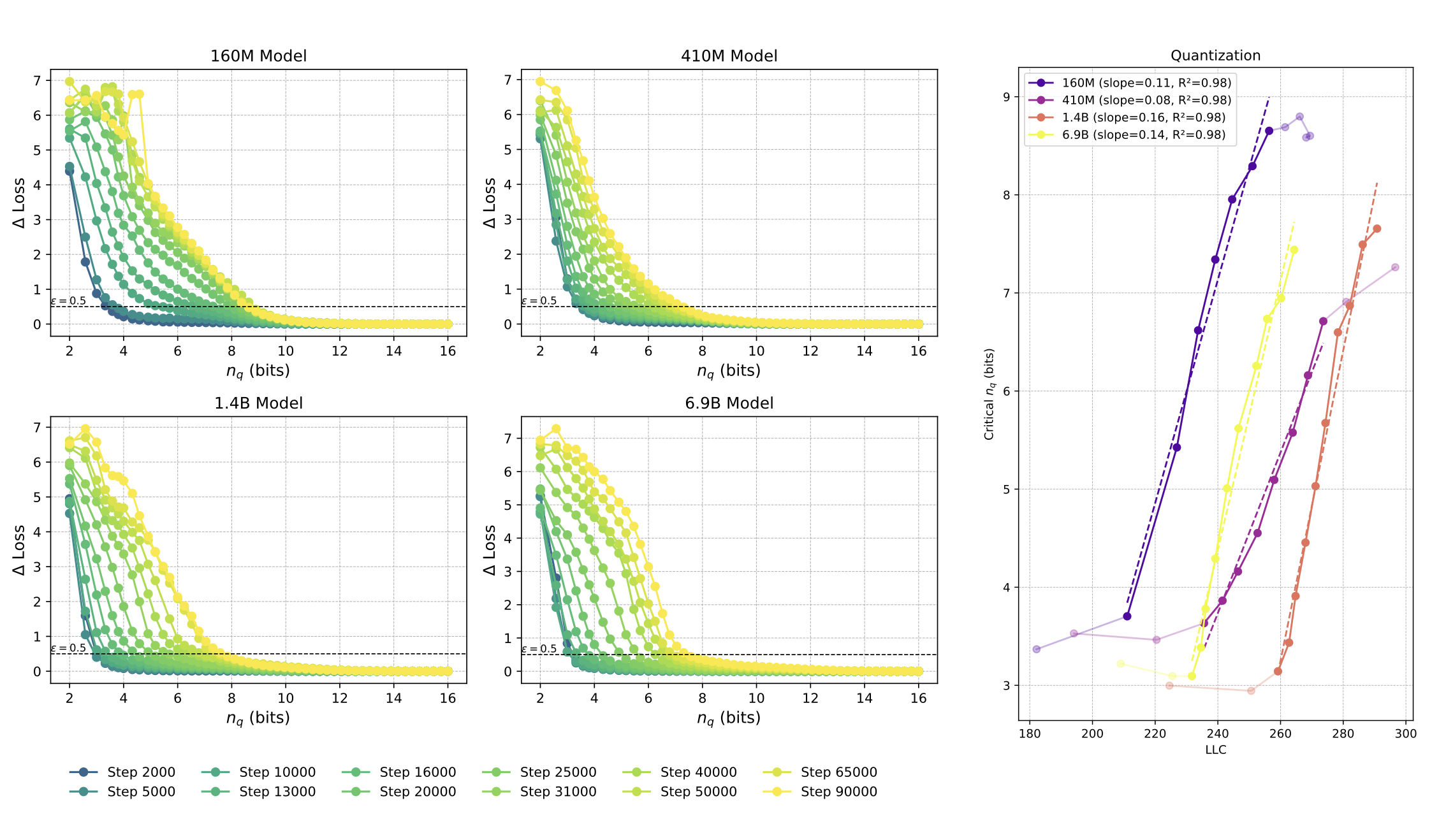

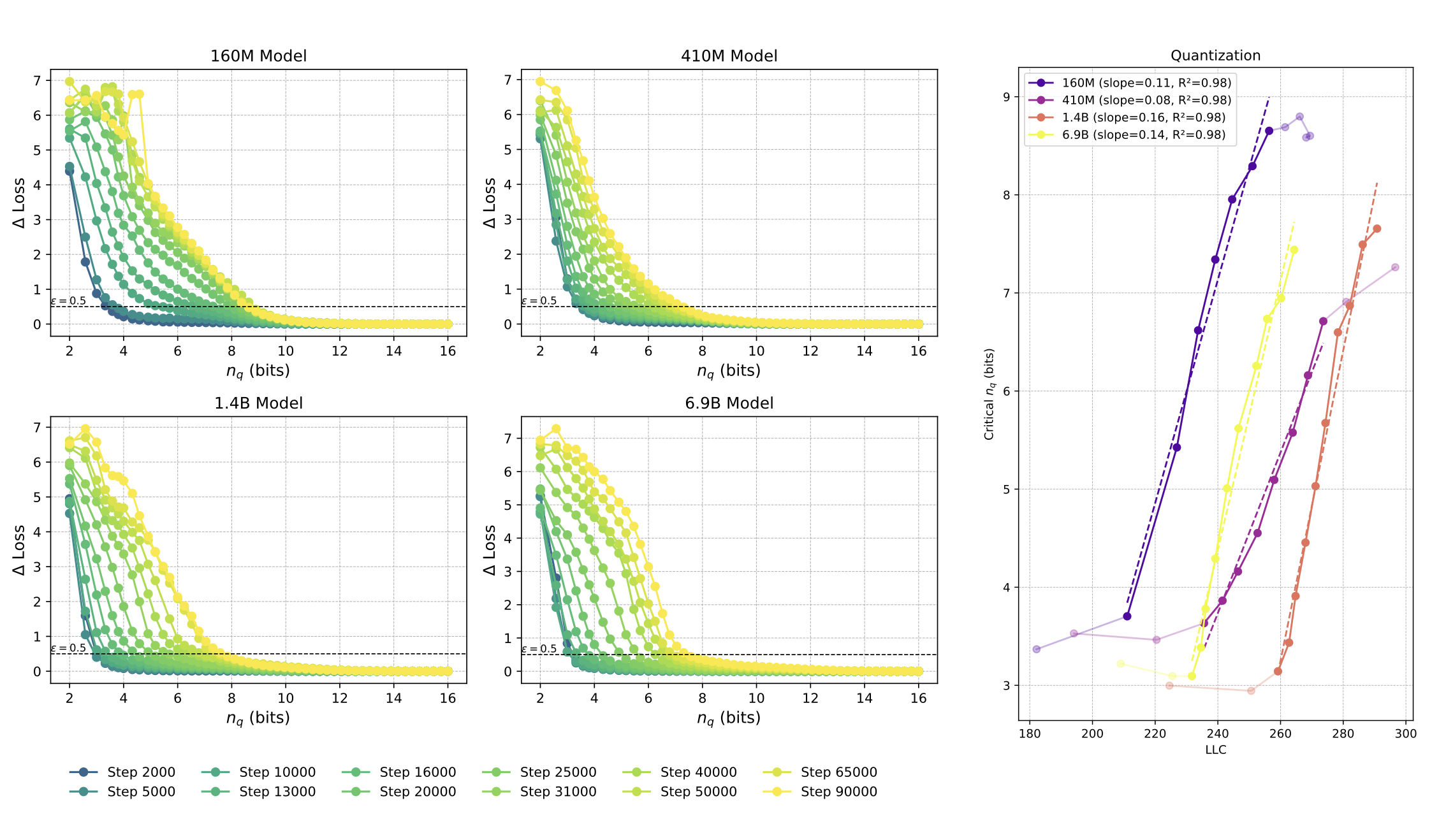

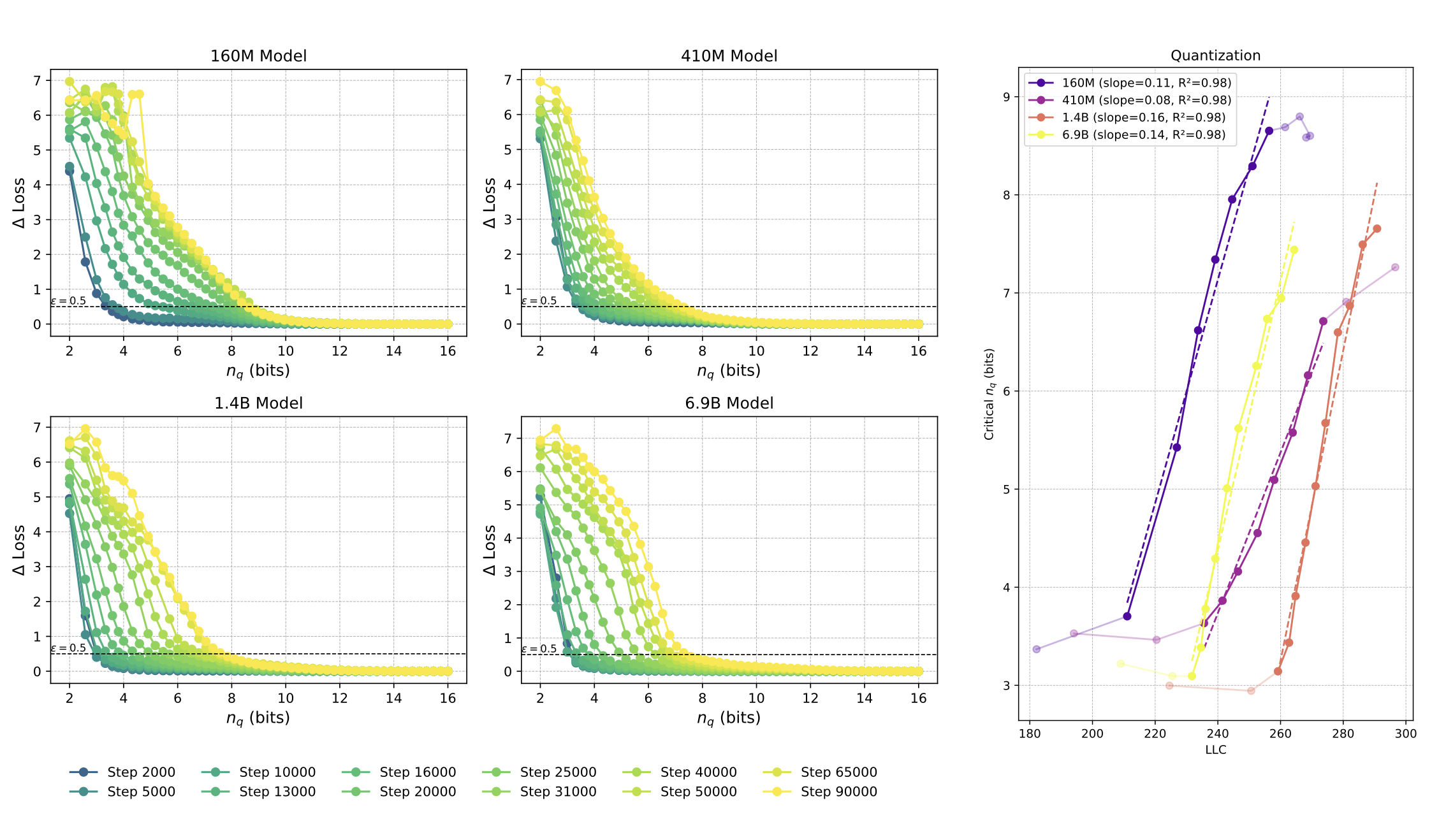

We study neural network compressibility by using singular learning theory to extend the minimum desc...

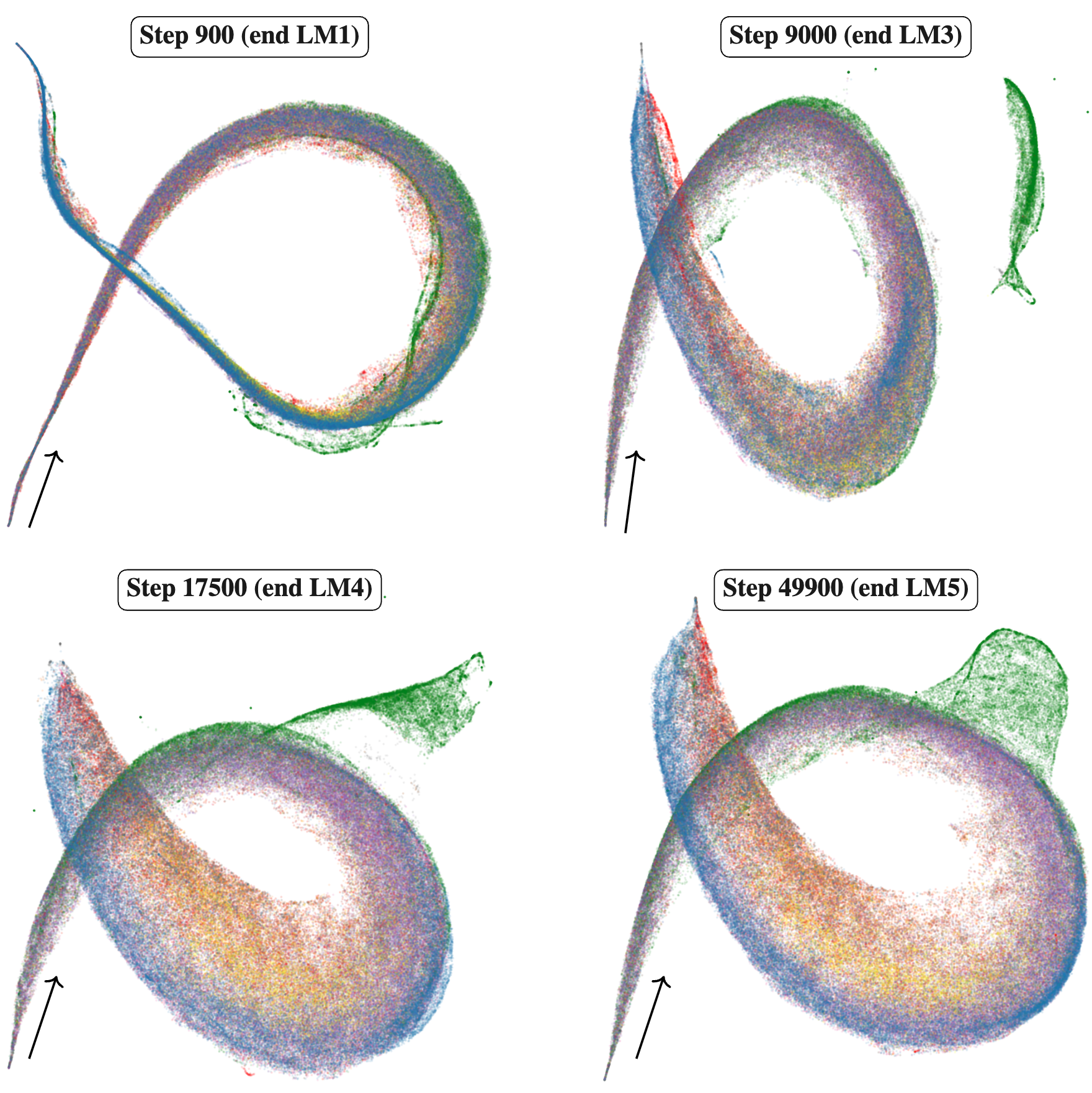

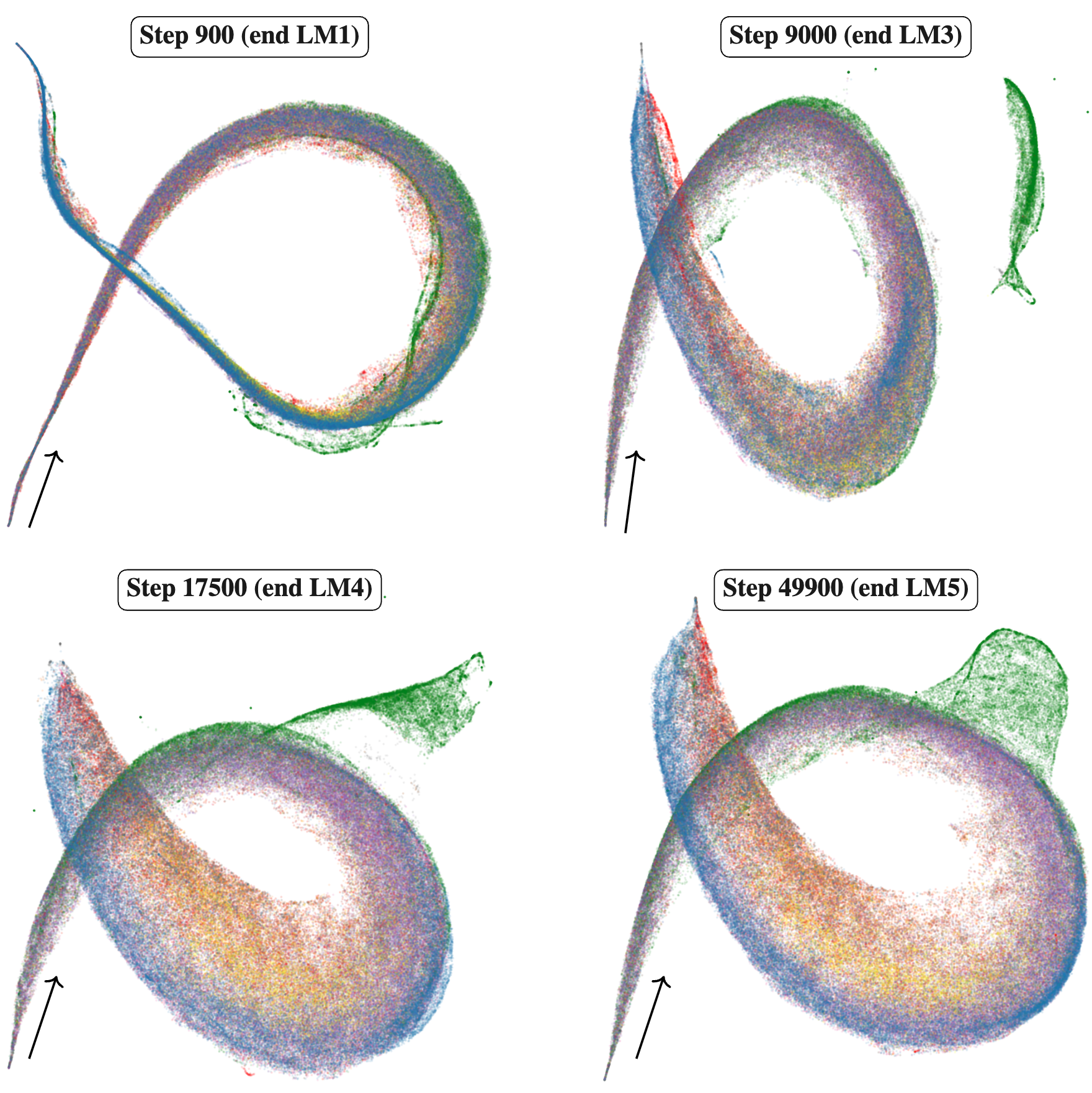

Embryology of a Language Model

August 1, 2025 | Wang et al.

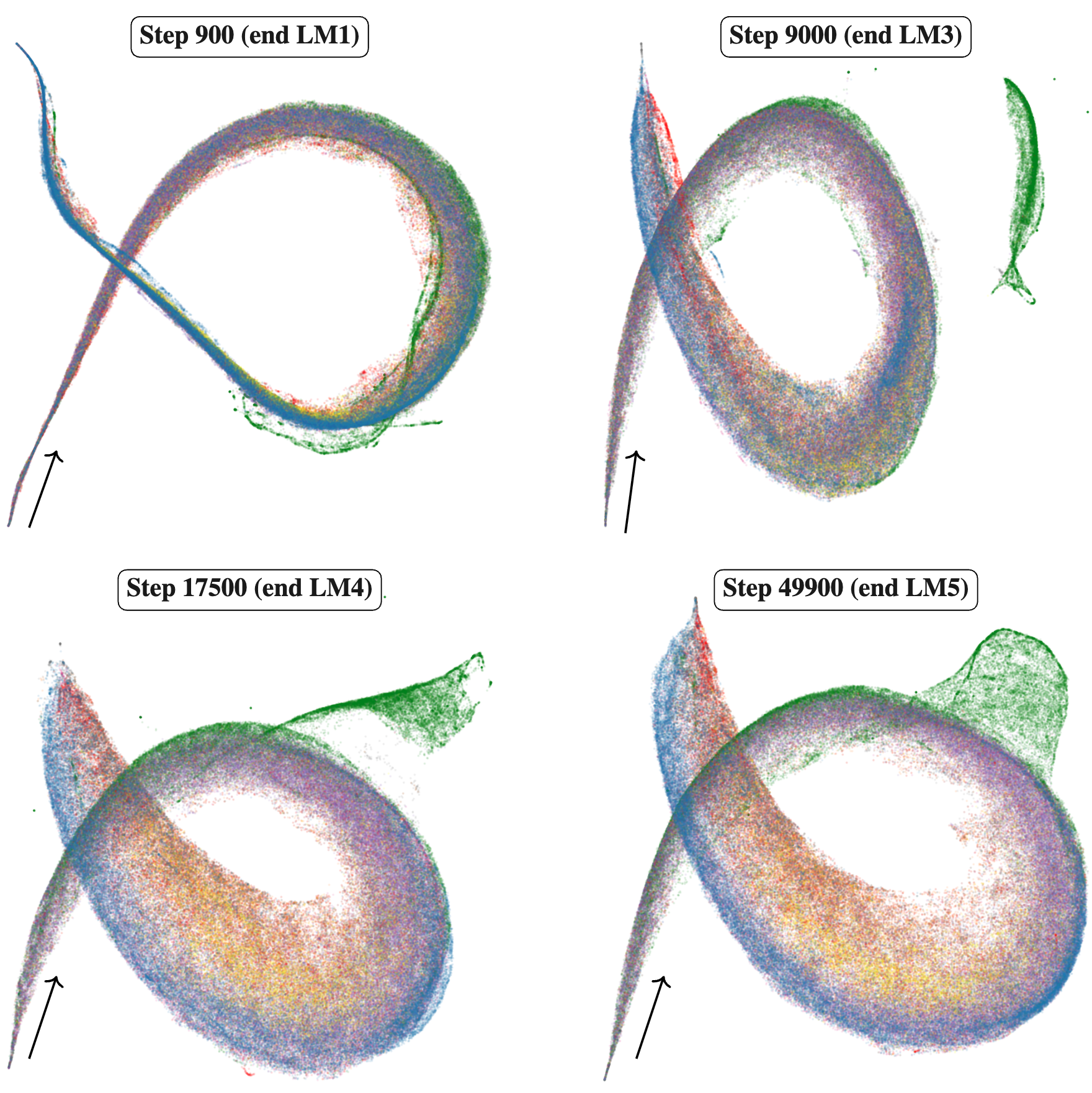

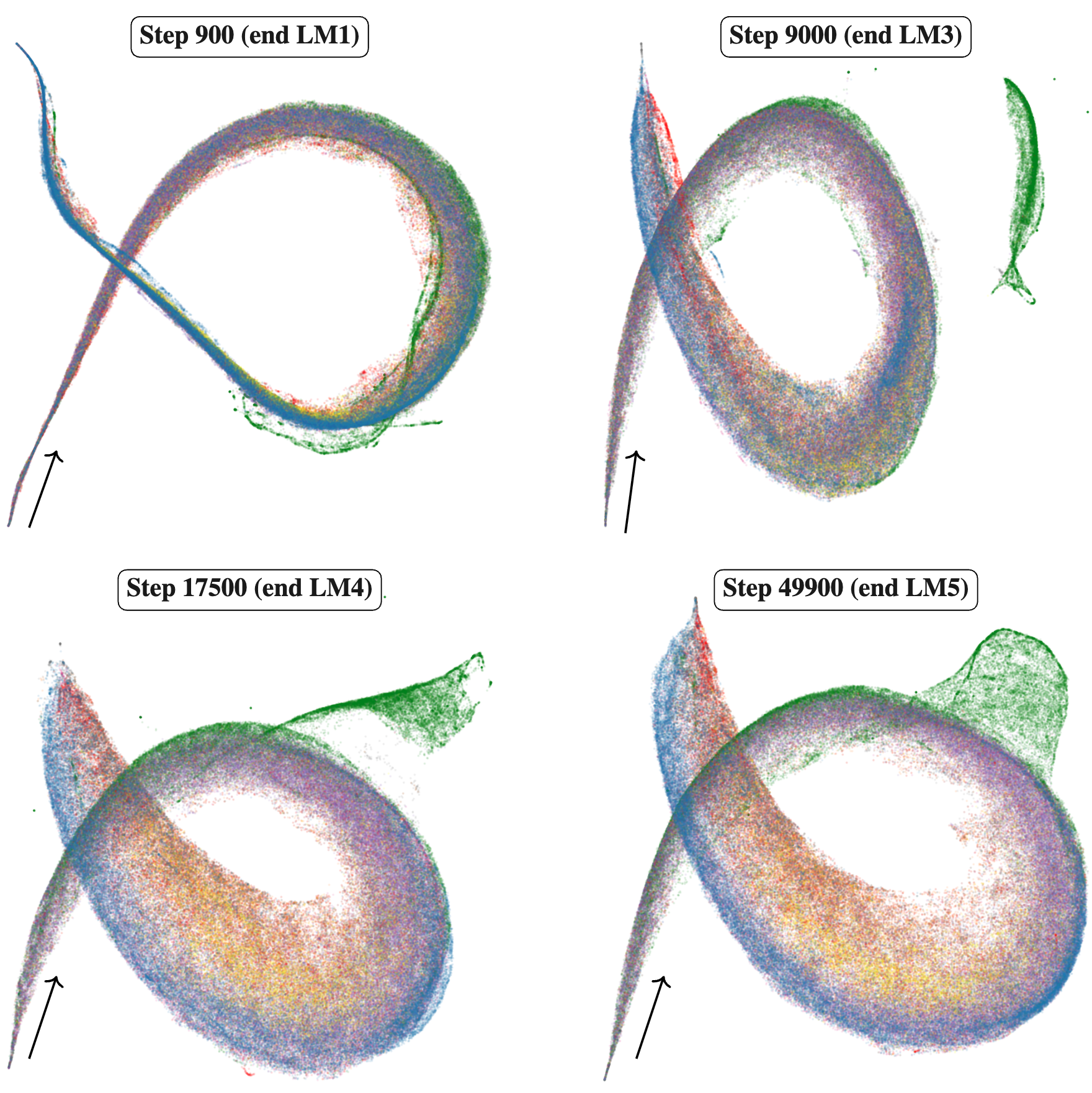

Understanding how language models develop their internal computational structure is a central proble...

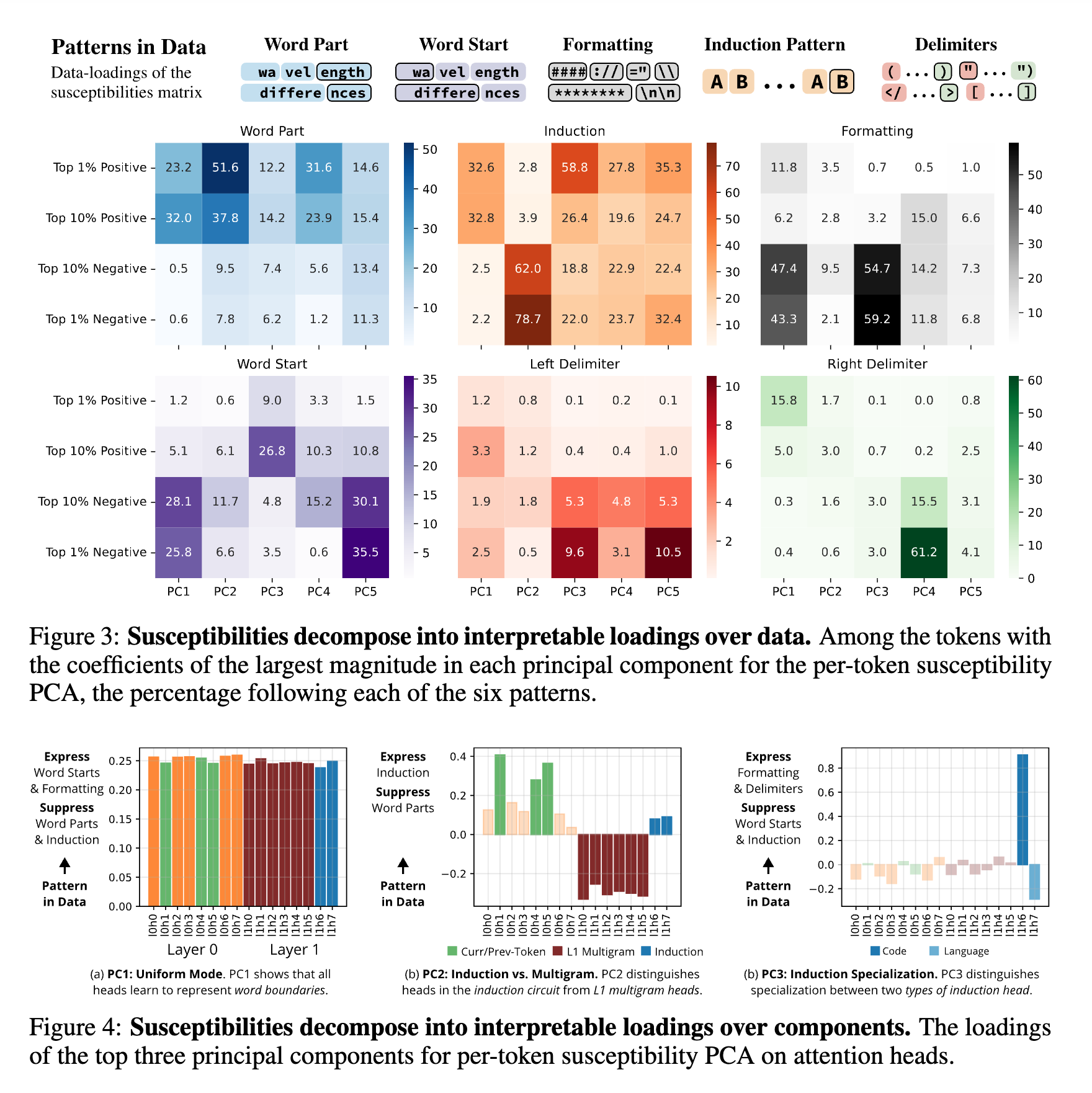

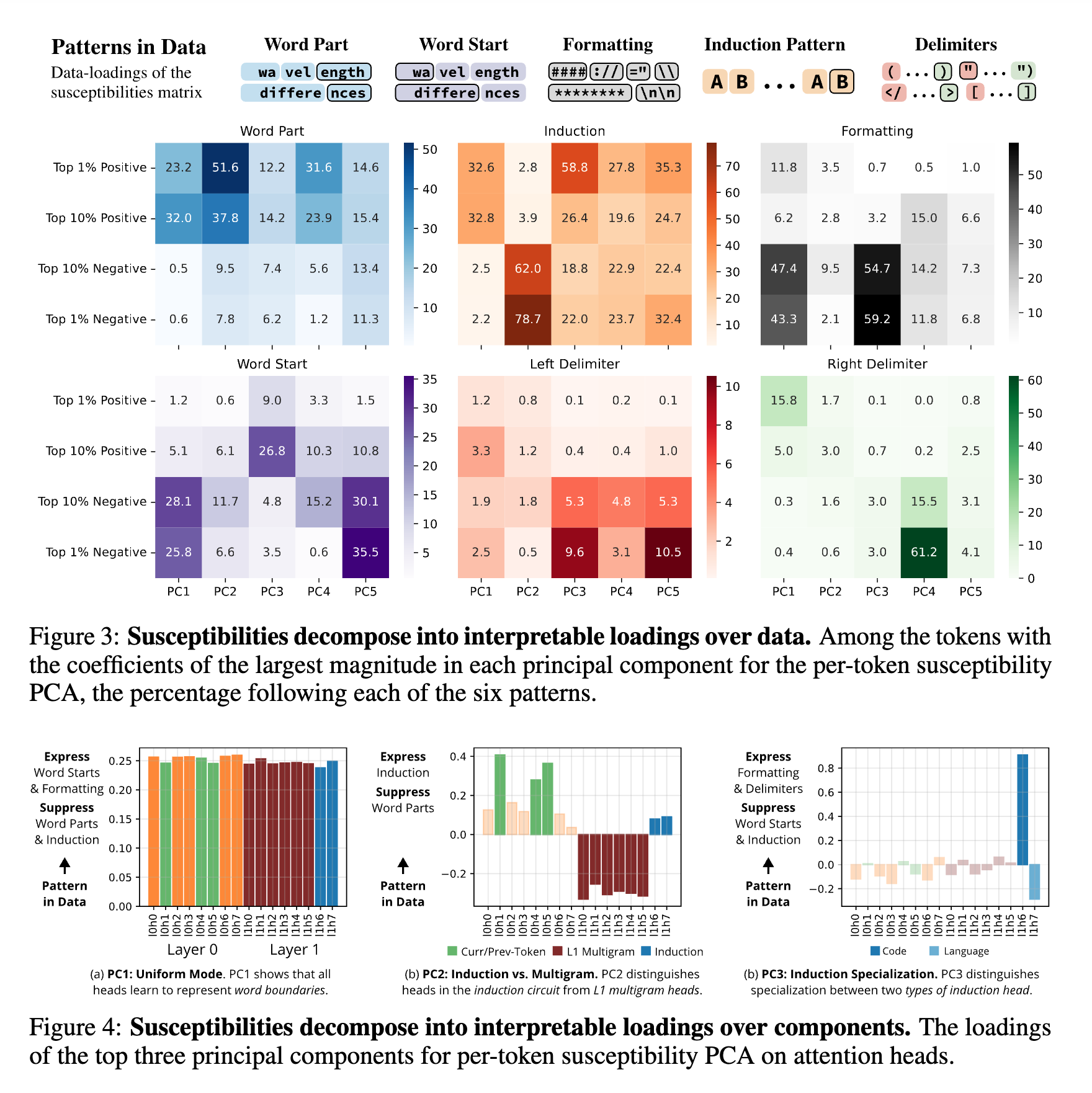

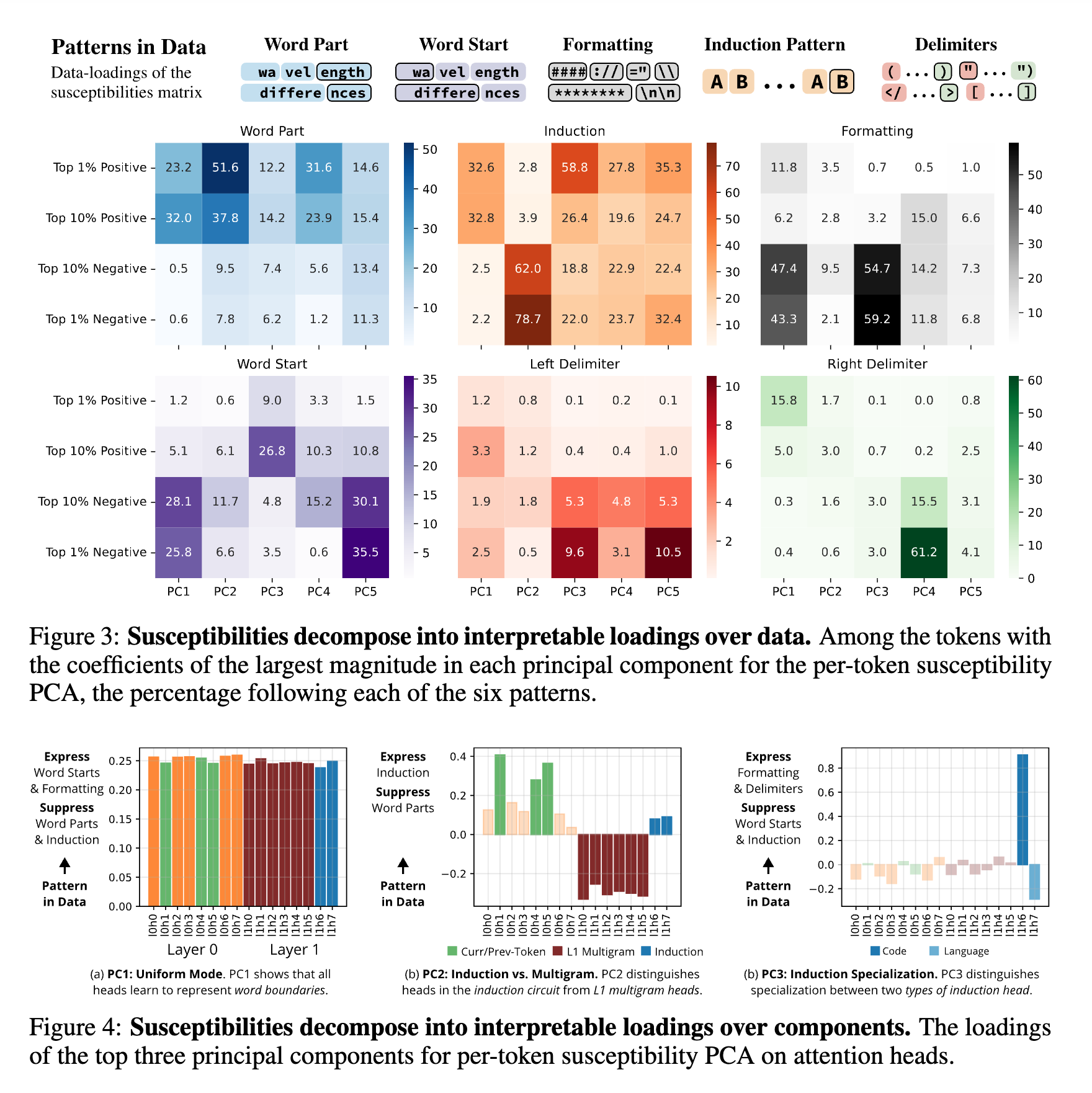

Structural Inference: Interpreting Small Language Models with Susceptibilities

April 25, 2025 | Baker et al.

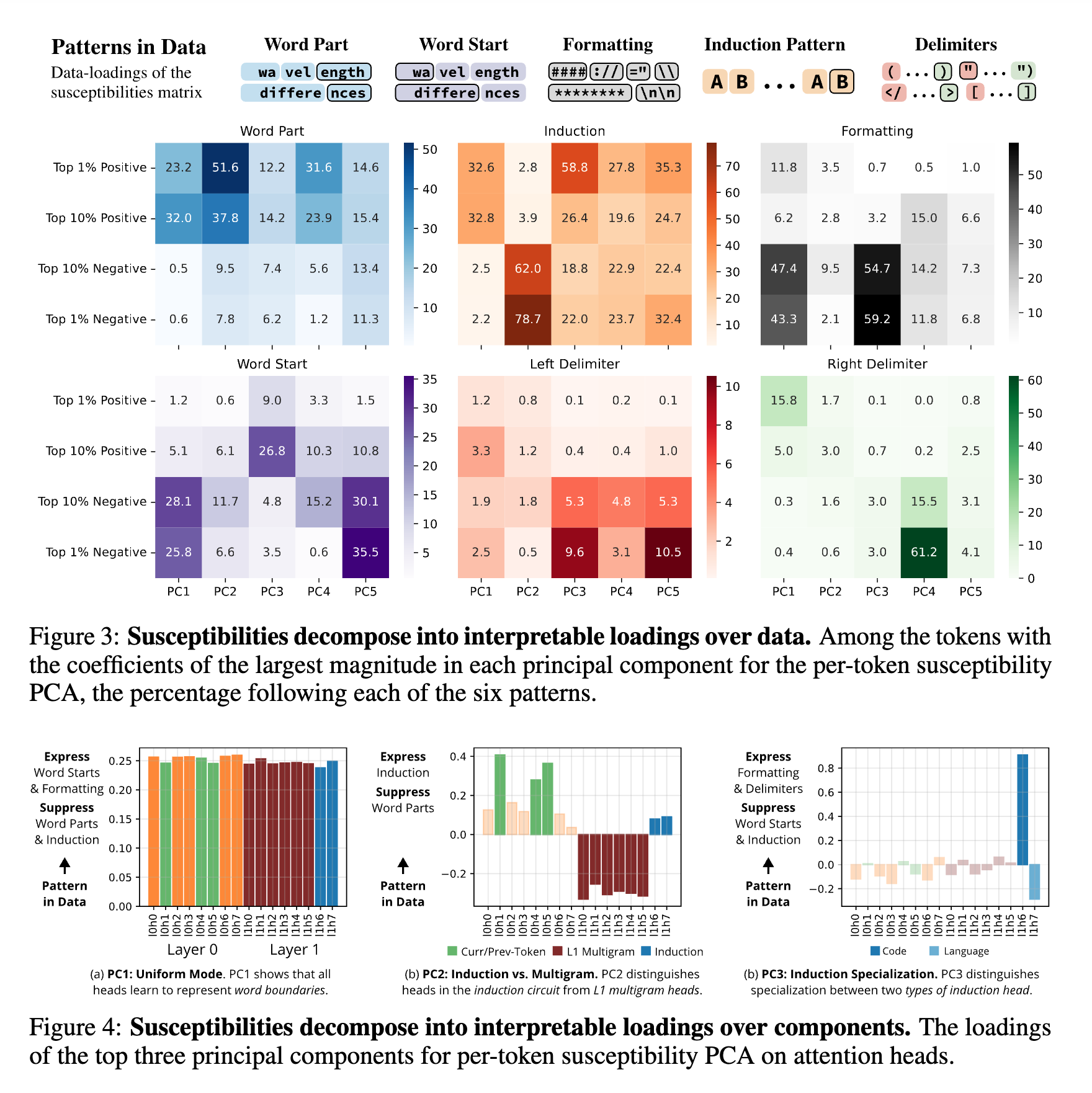

We develop a linear response framework for interpretability that treats a neural network as a Bayesi...

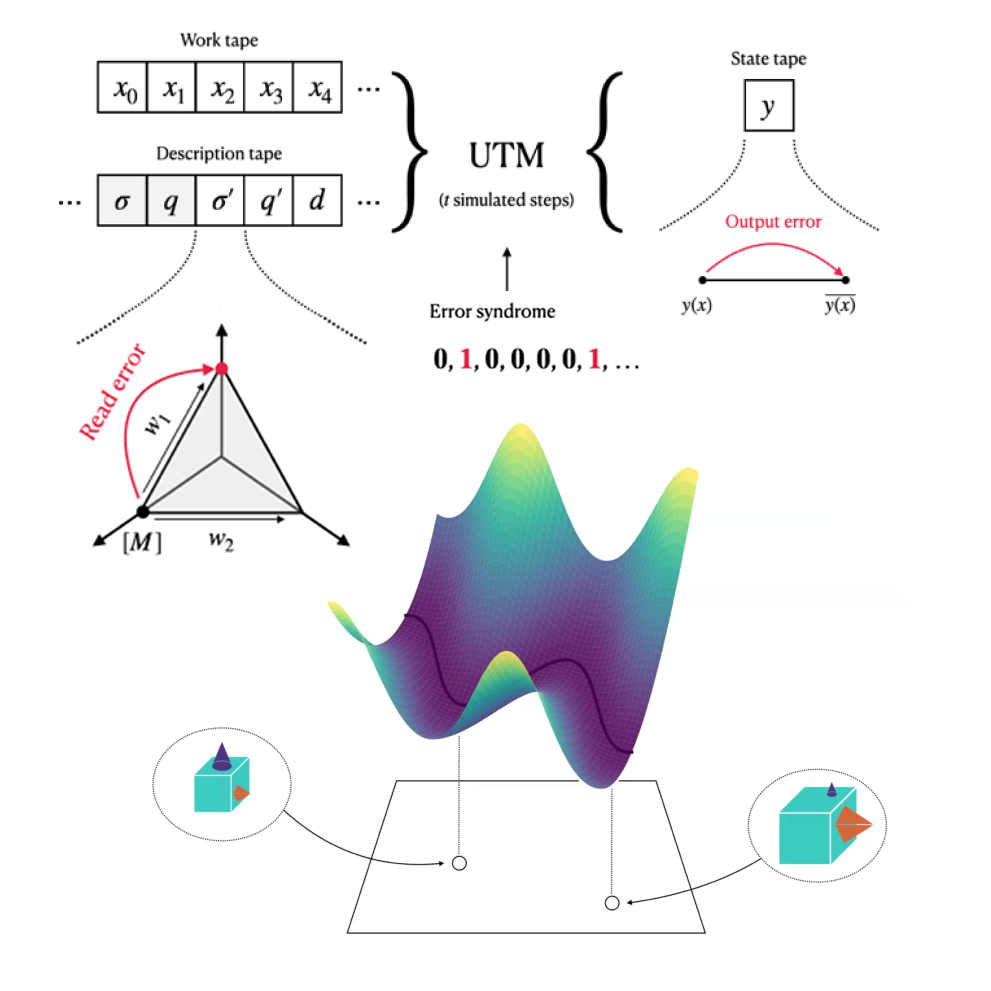

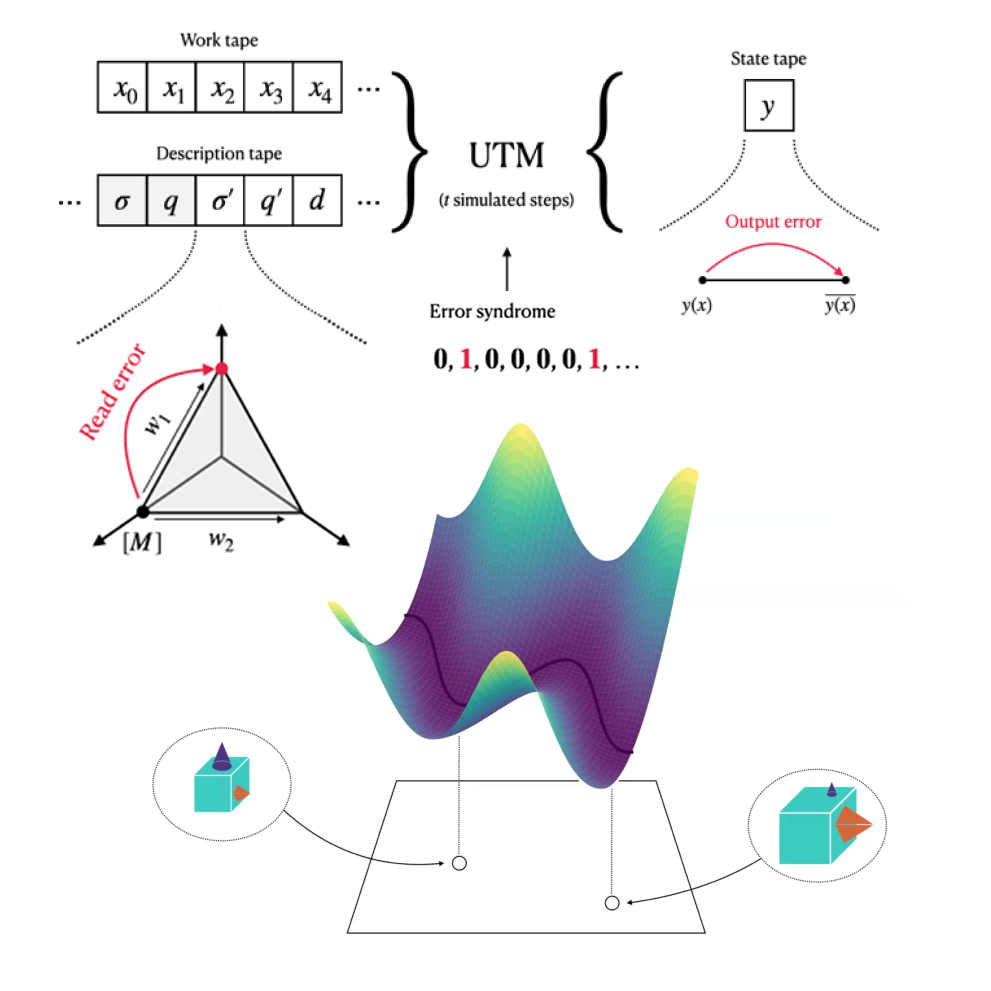

Programs as Singularities

April 10, 2025 | Murfet and Troiani

We develop a correspondence between the structure of Turing machines and the structure of singularit...

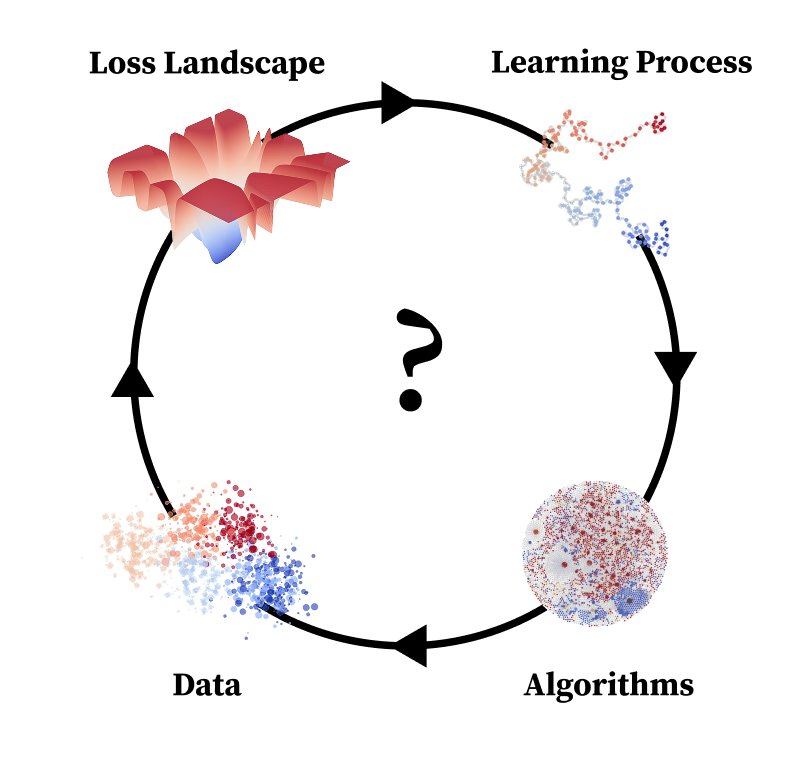

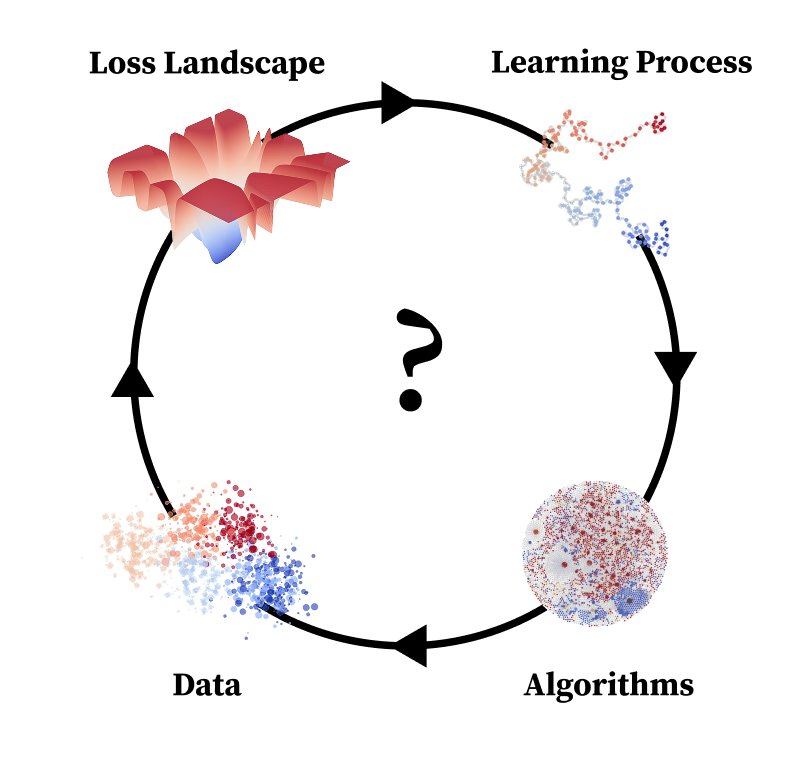

You Are What You Eat – AI Alignment Requires Understanding How Data Shapes Structure and Generalisation

February 8, 2025 | Lehalleur et al.

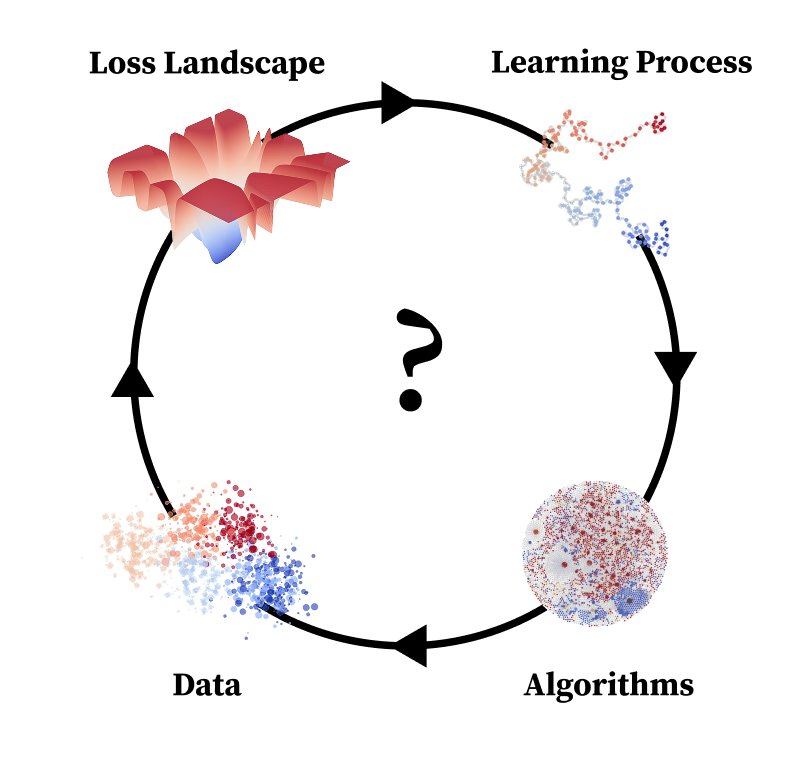

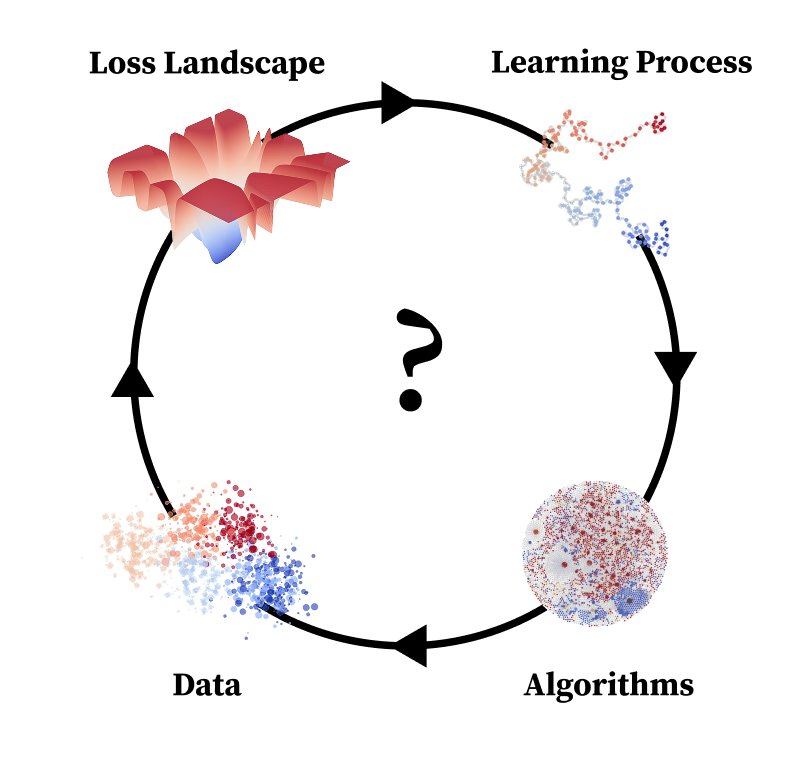

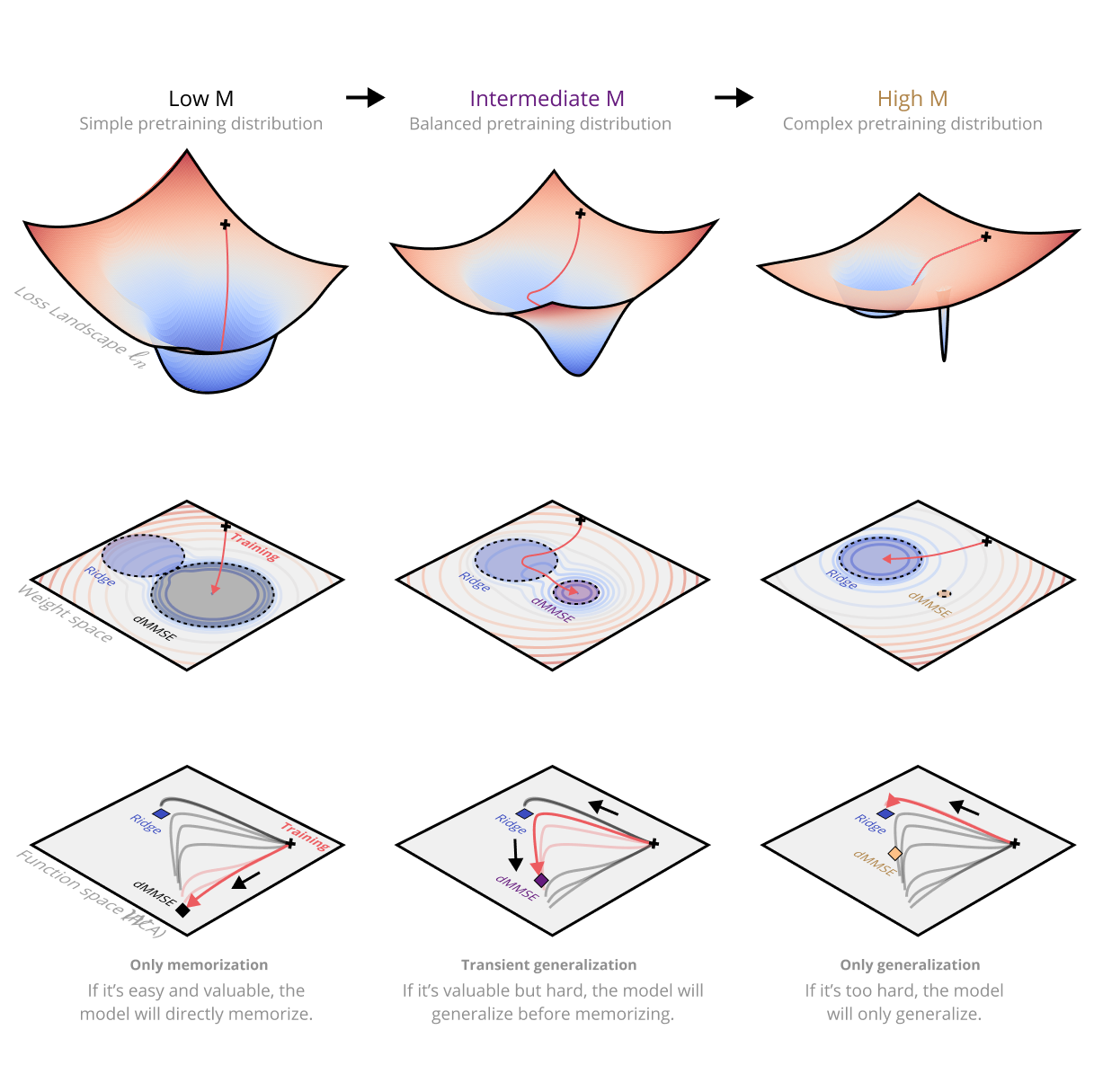

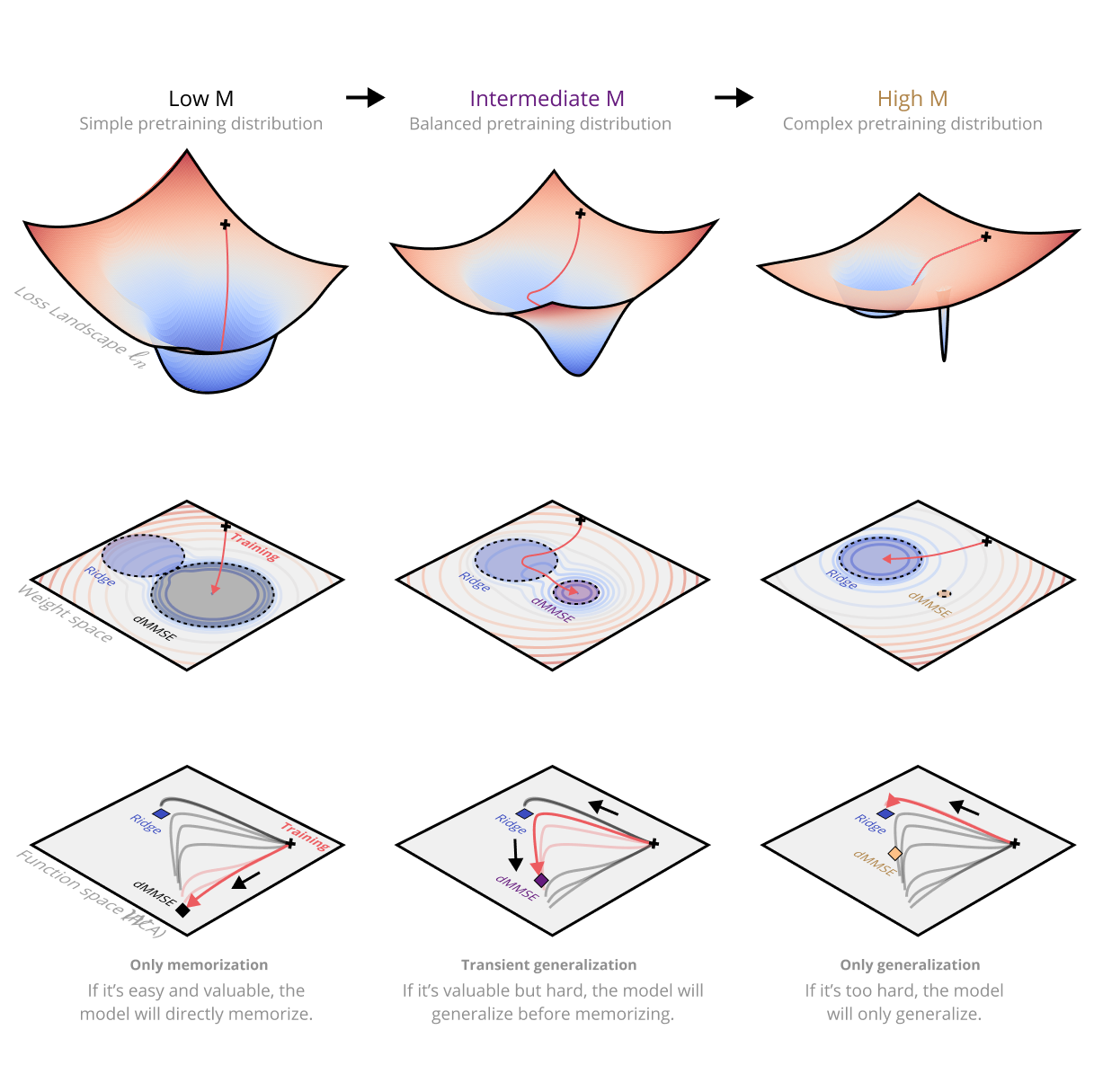

In this position paper, we argue that understanding the relation between structure in the data distr...

Dynamics of Transient Structure in In-Context Linear Regression Transformers

January 29, 2025 | Carroll et al.

Modern deep neural networks display striking examples of rich internal computational structure.

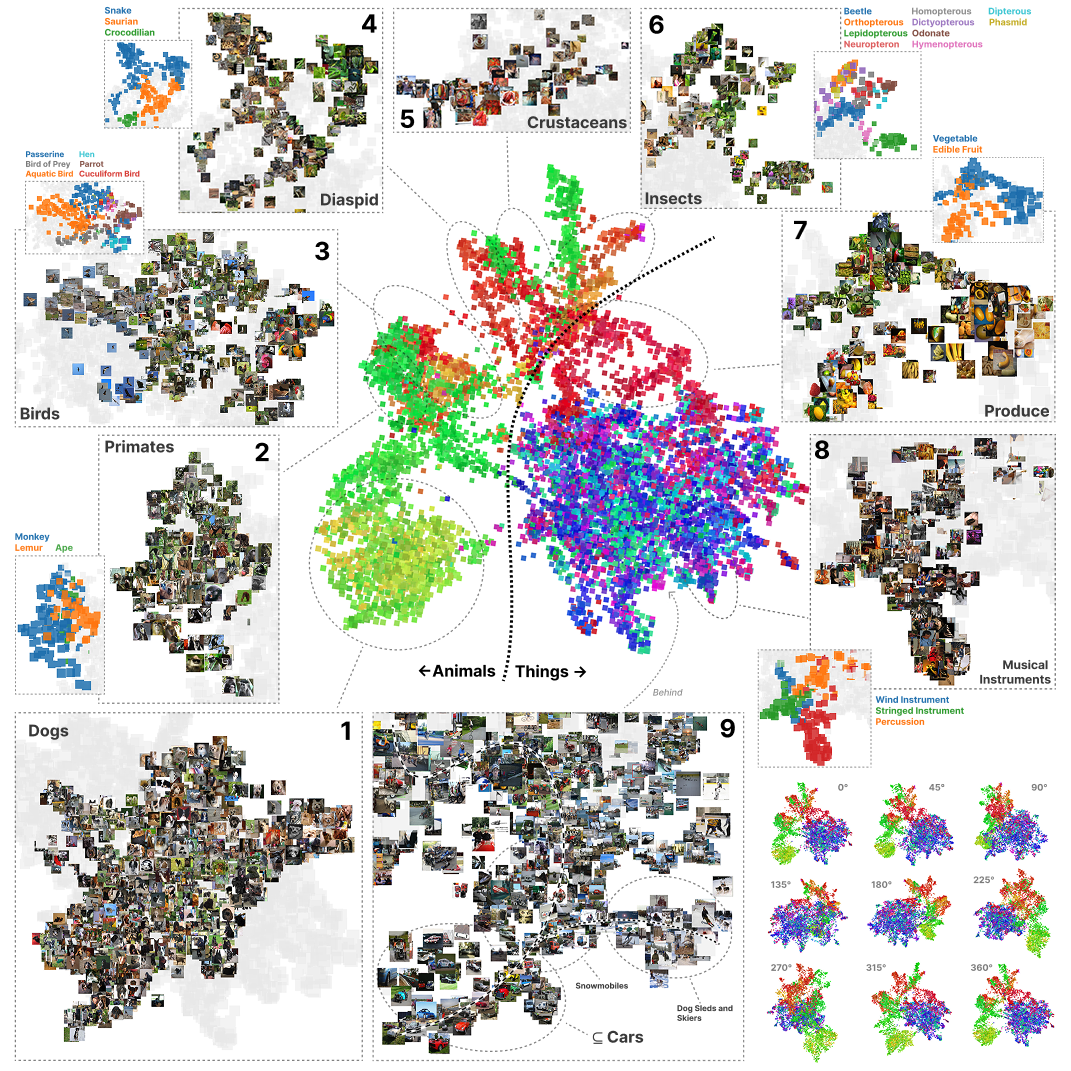

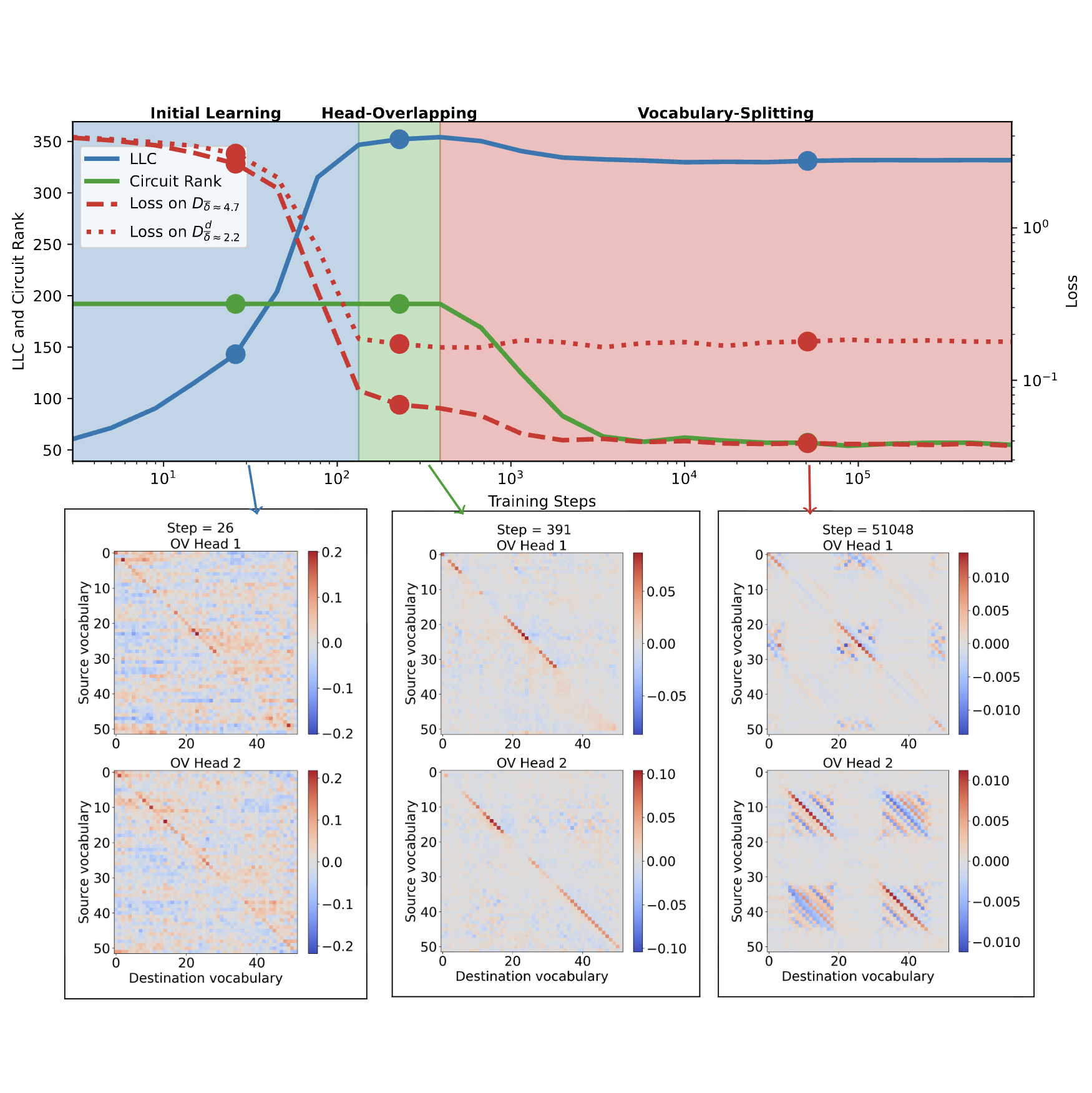

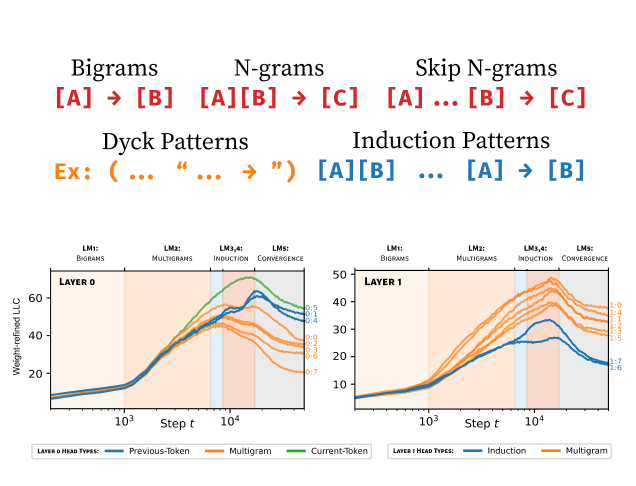

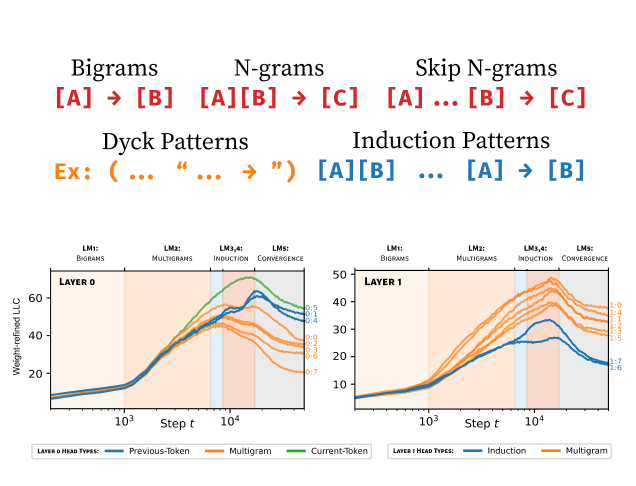

Differentiation and Specialization of Attention Heads via the Refined Local Learning Coefficient

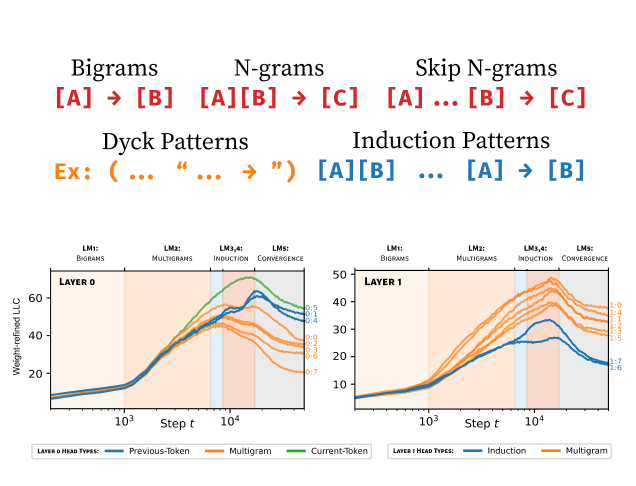

October 4, 2024 | Wang et al. | ICLR | Spotlight

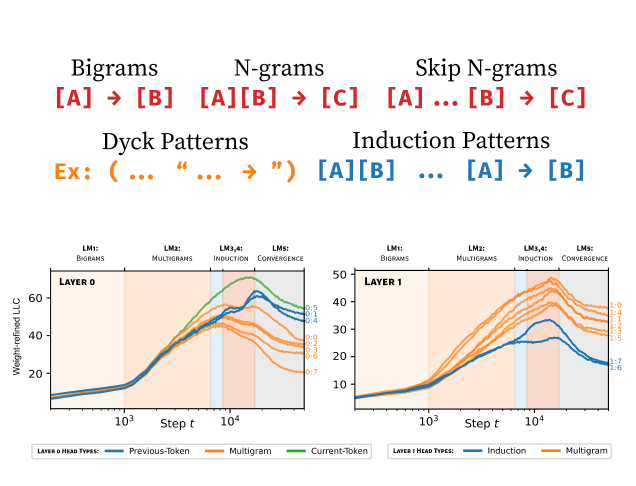

We introduce refined variants of the Local Learning Coefficient (LLC), a measure of model complexity...

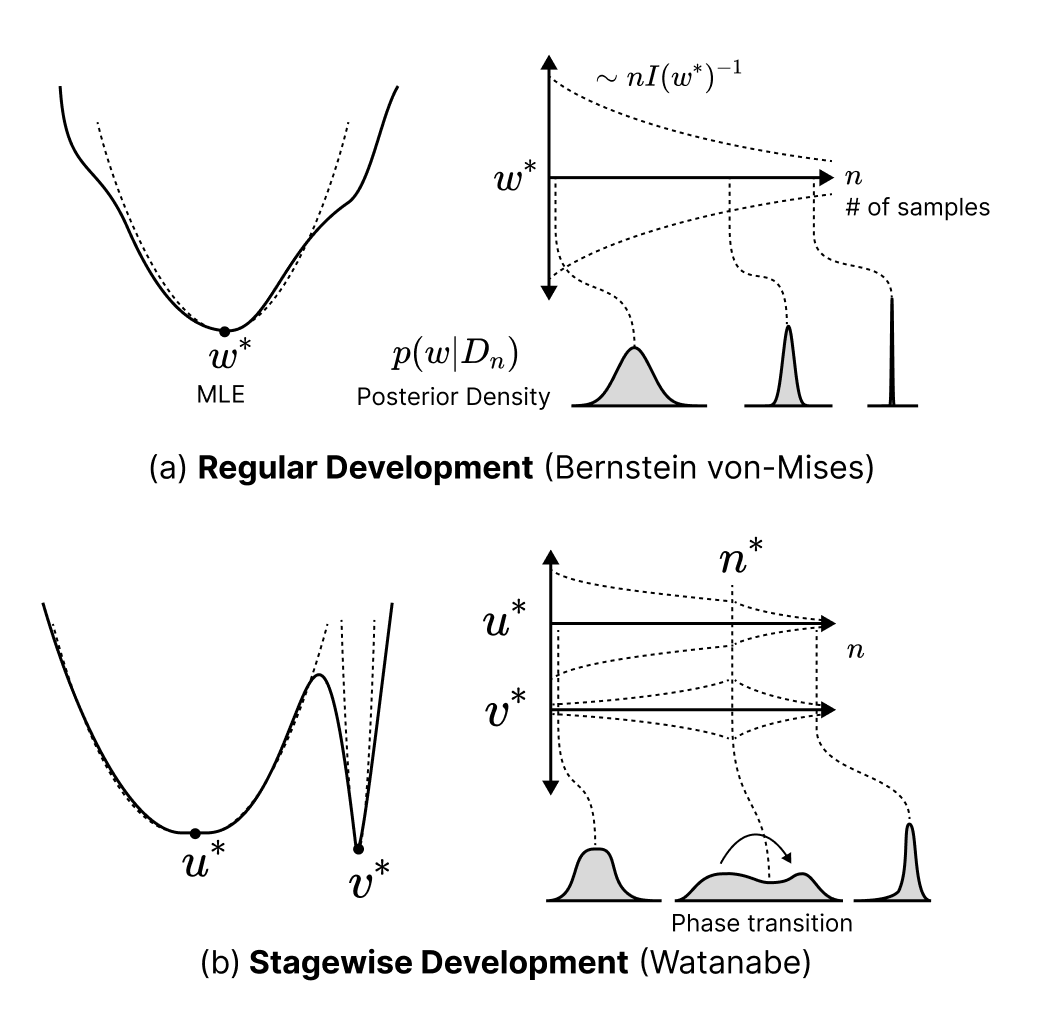

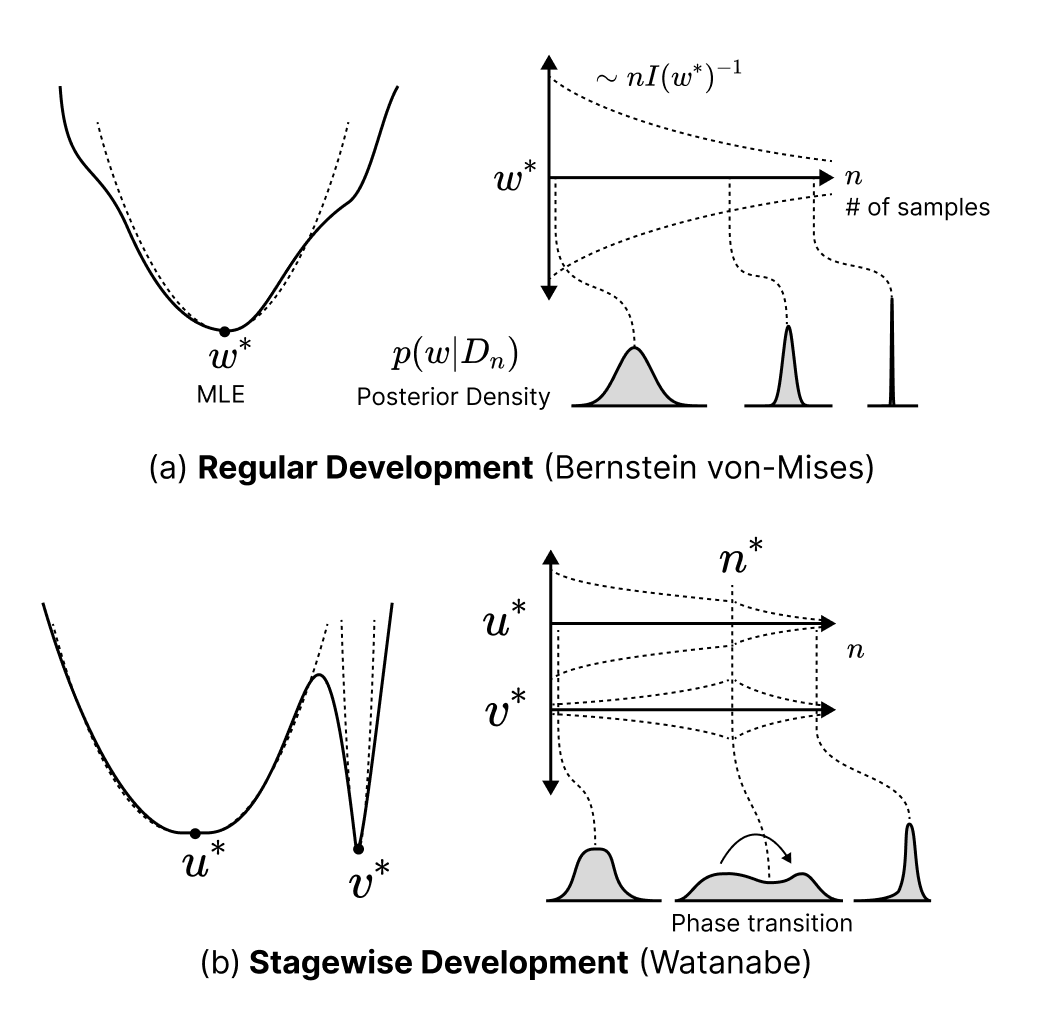

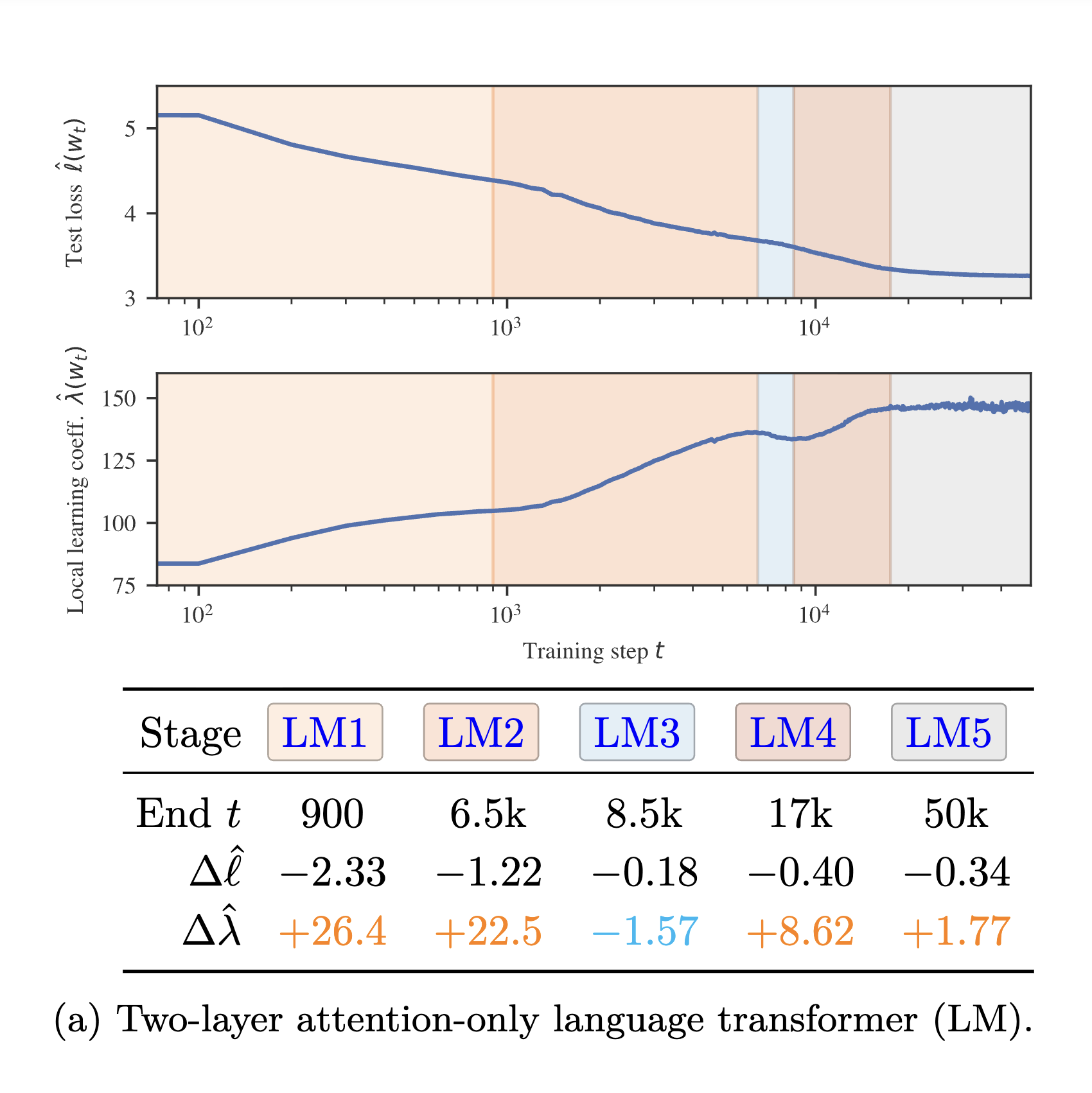

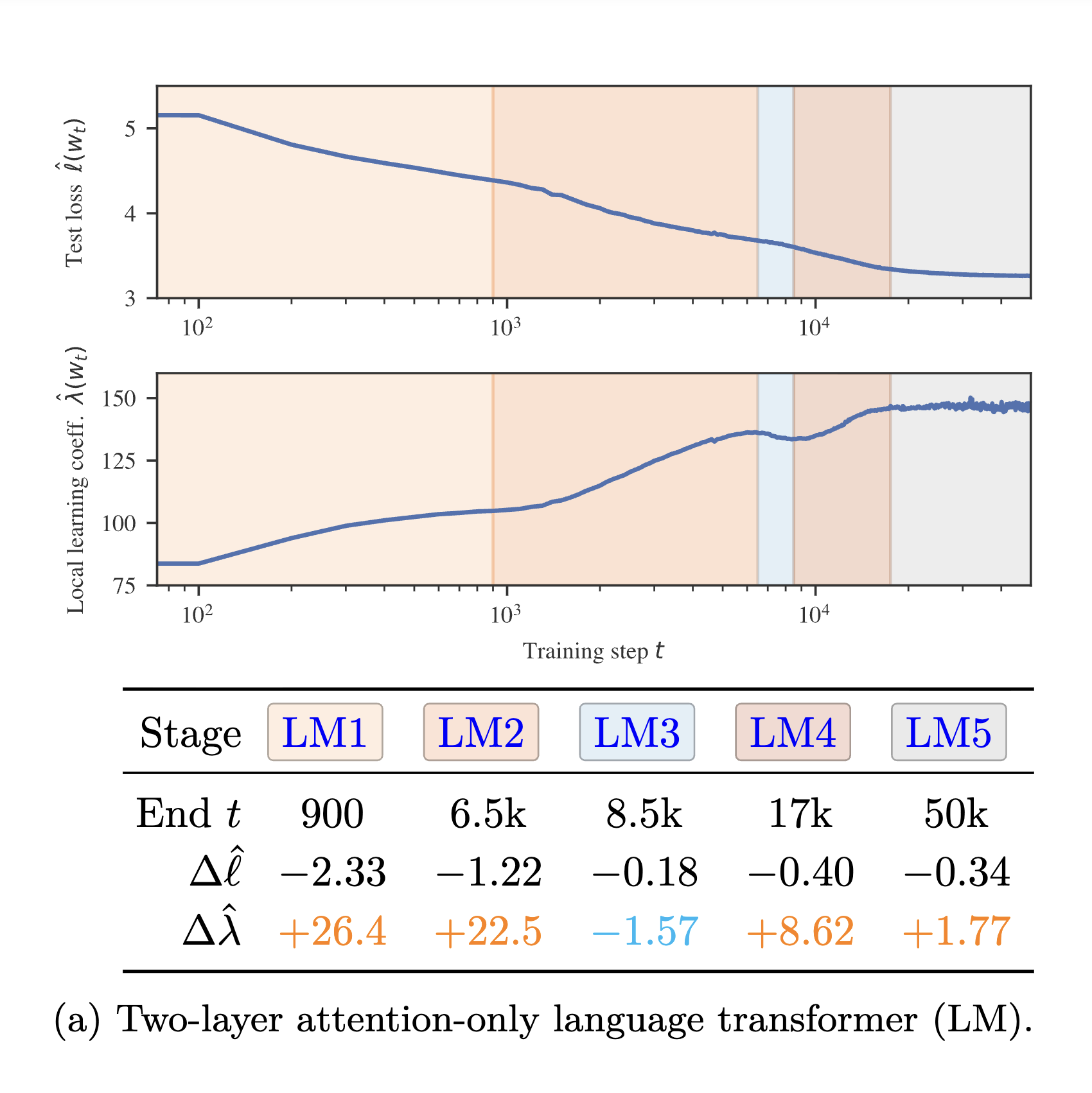

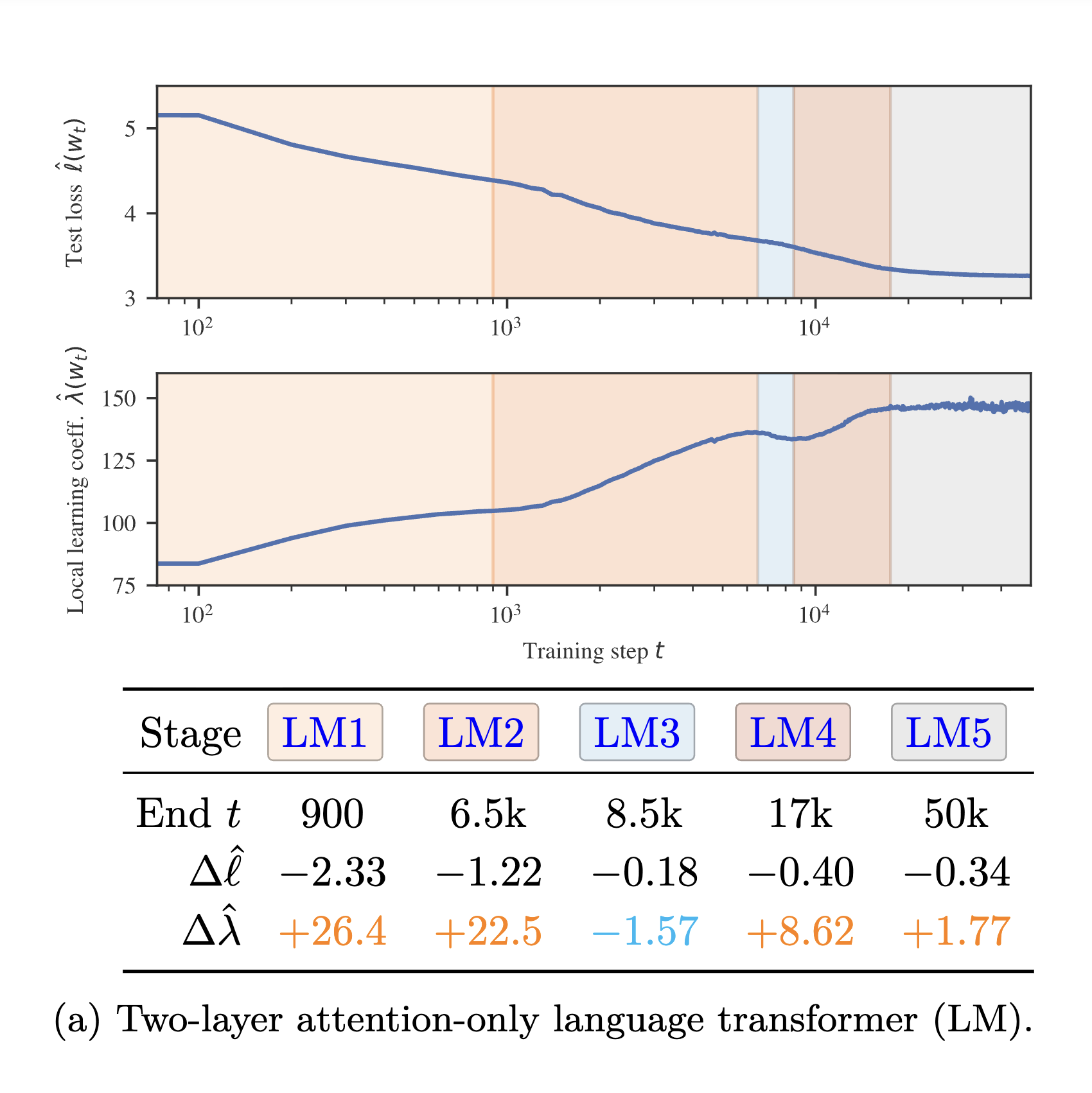

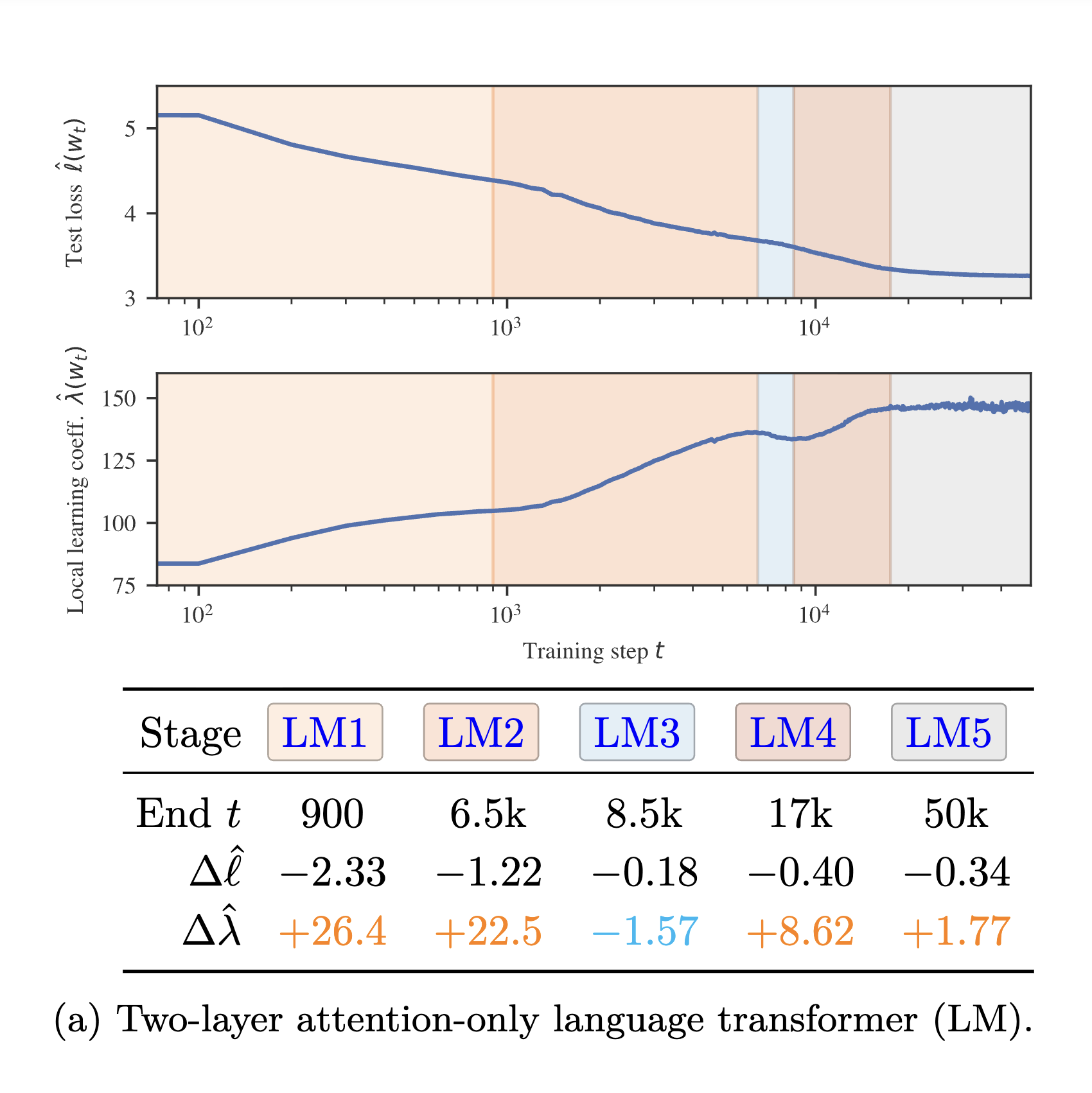

Loss landscape geometry reveals stagewise development of transformers

June 16, 2024 | Wang et al. | ICML HiLD Workshop | Best Paper

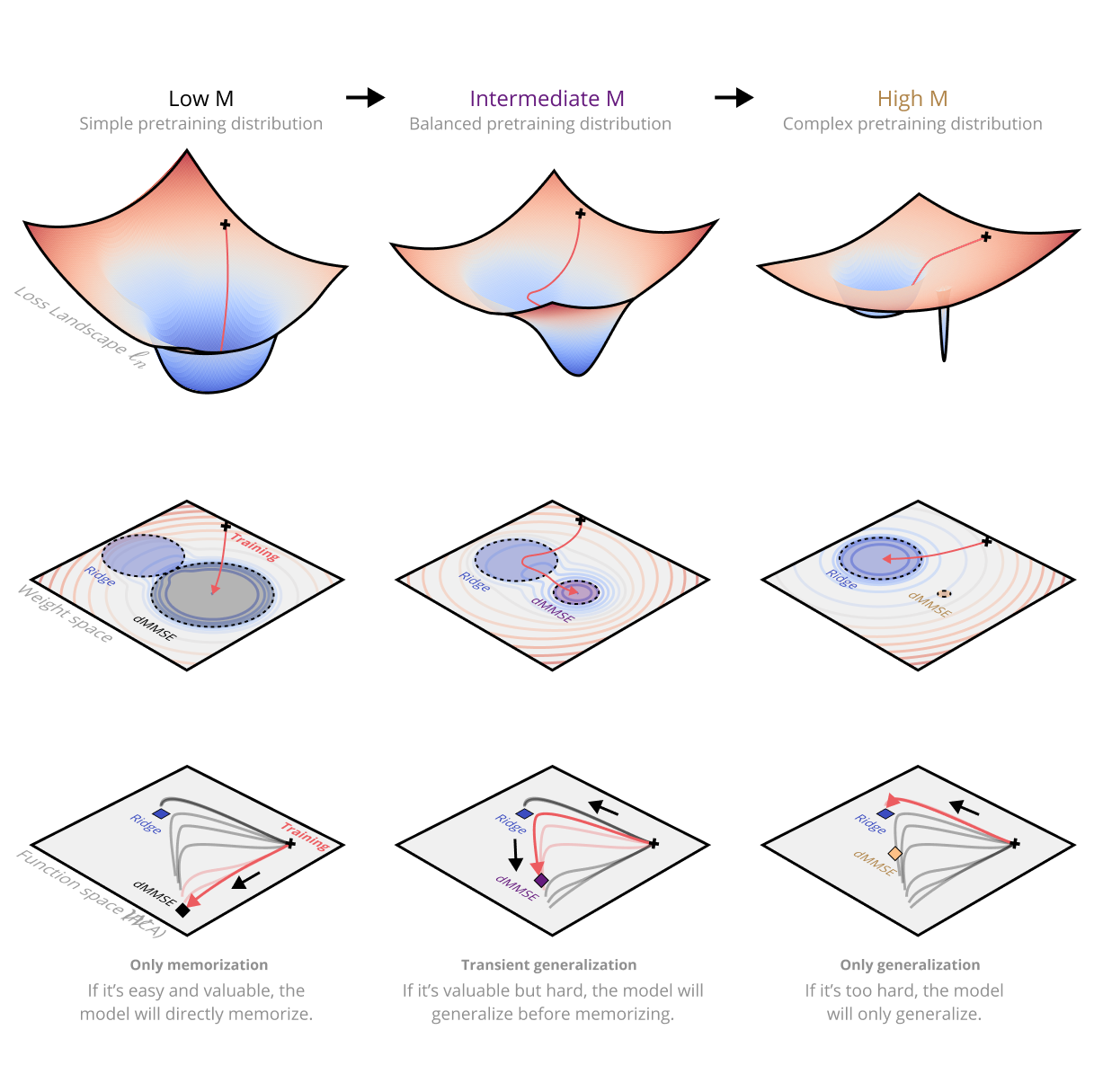

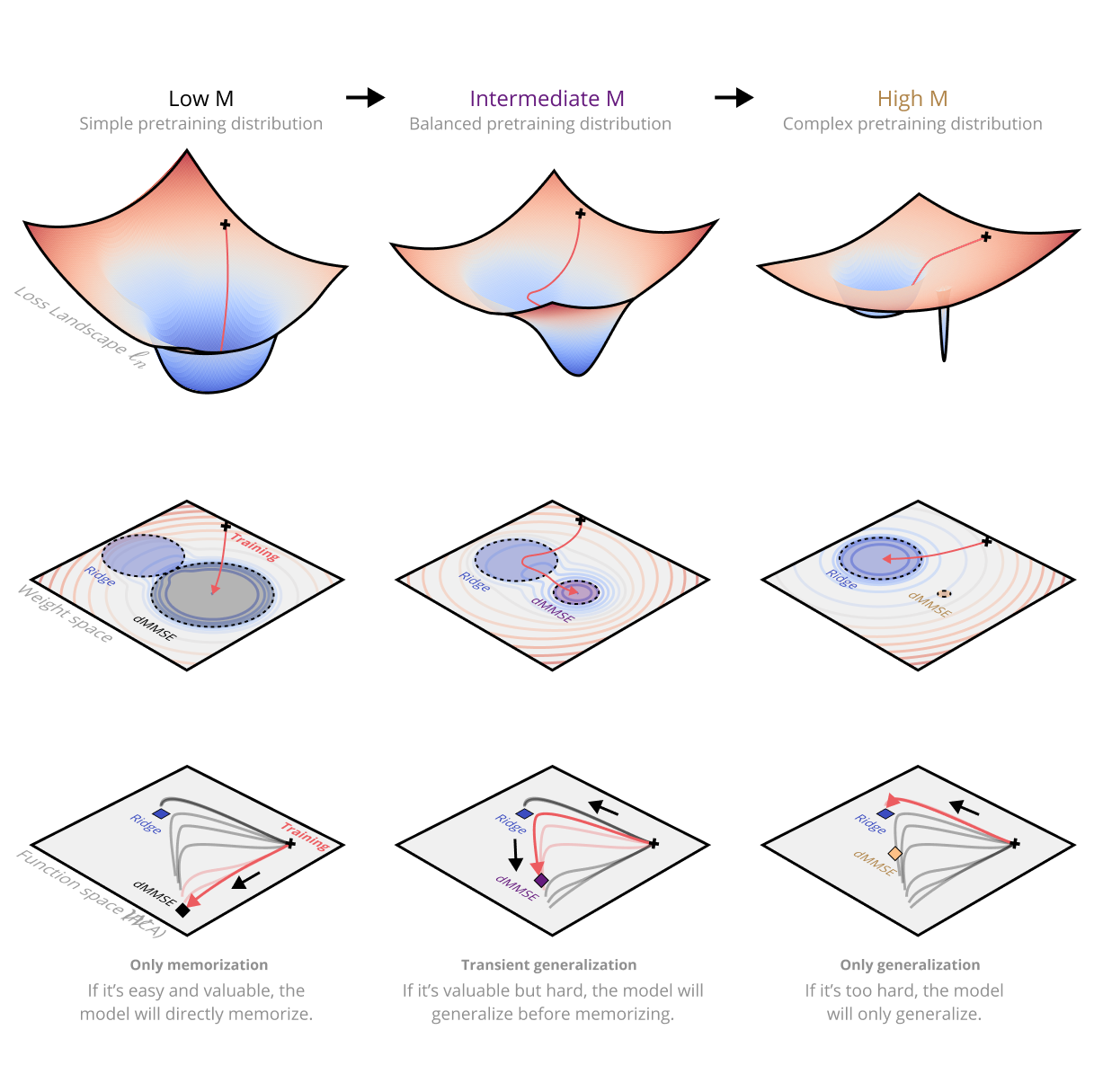

The development of the internal structure of neural networks throughout training occurs in tandem wi...

Loss Landscape Degeneracy and Stagewise Development of Transformers

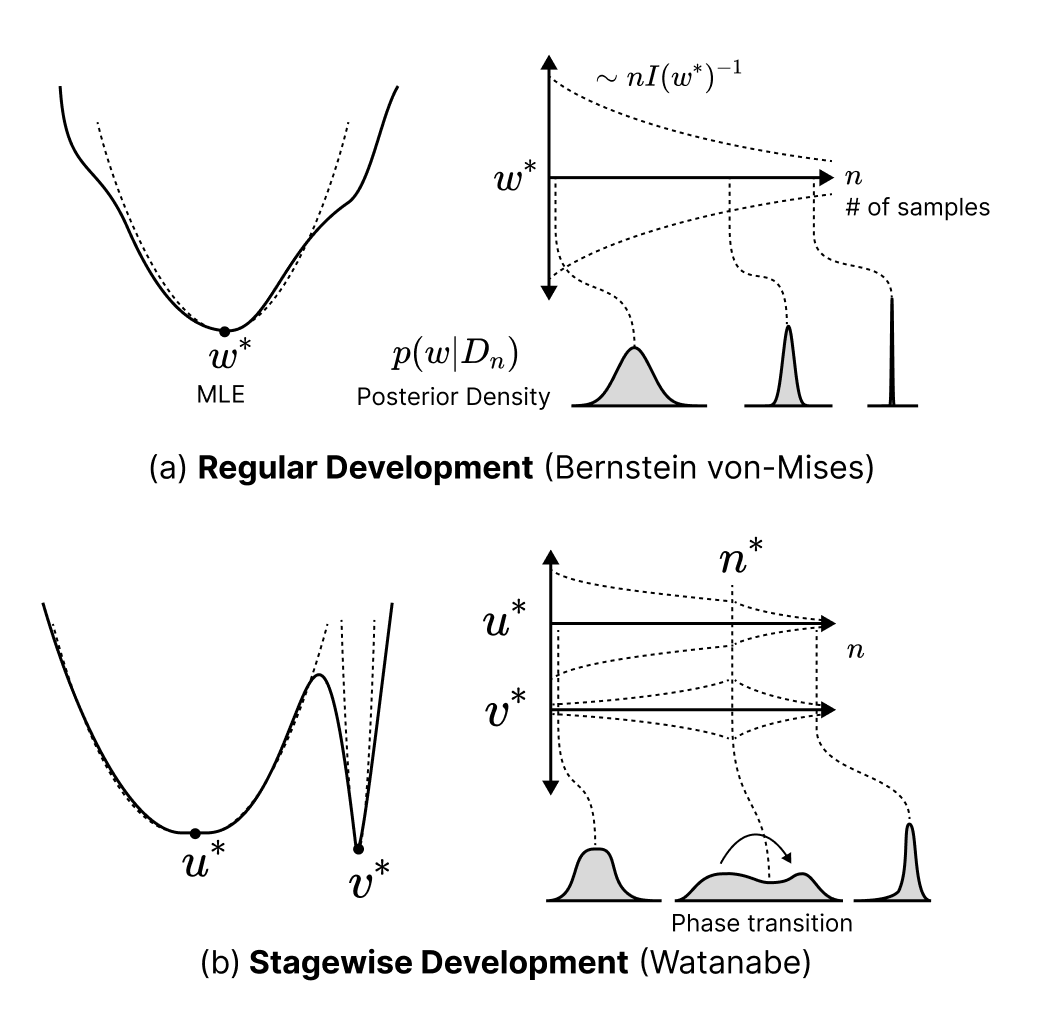

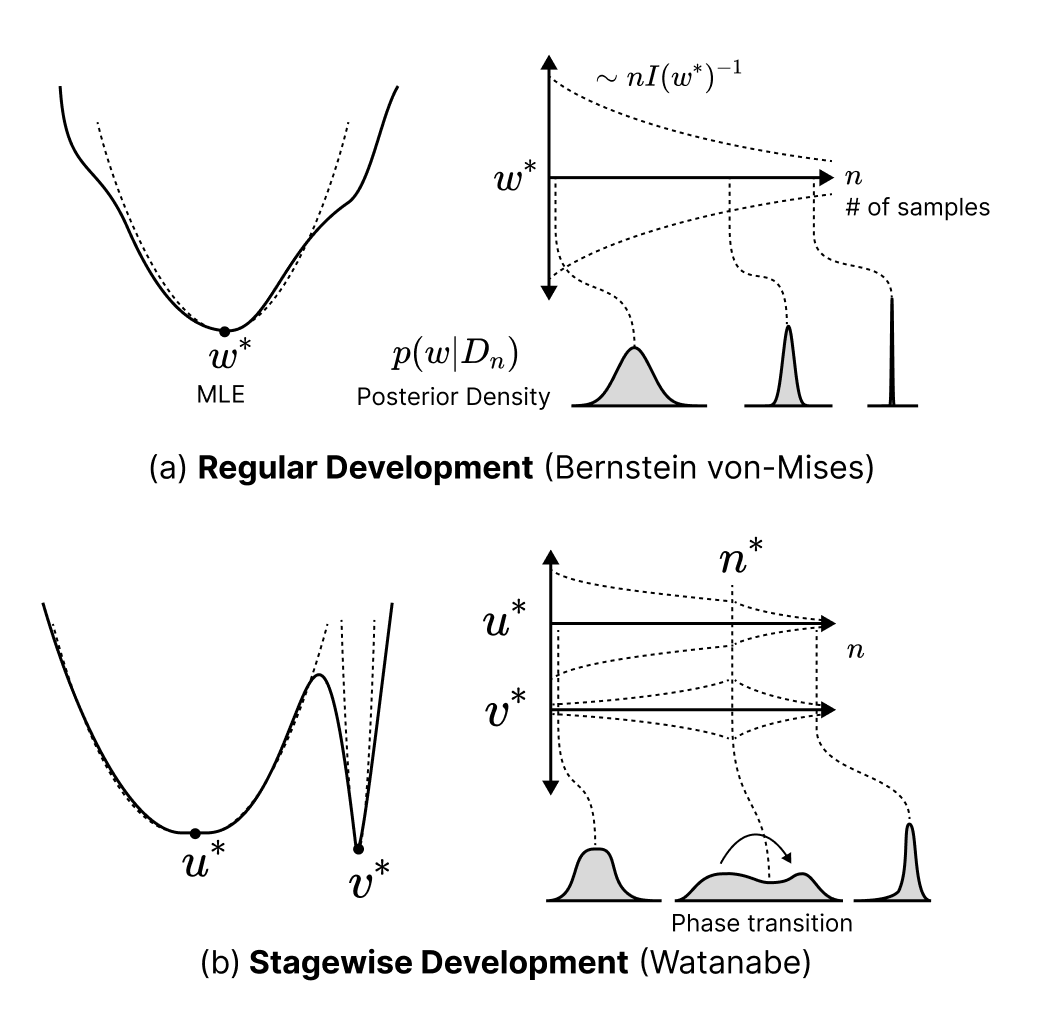

February 4, 2024 | Hoogland et al. | TMLR | Best Paper at 2024 ICML HiLD Workshop

We show that in-context learning emerges in transformers in discrete developmental stages, when they...

Dynamical versus Bayesian Phase Transitions in a Toy Model of Superposition

October 10, 2023 | Chen et al.

We investigate phase transitions in a Toy Model of Superposition (TMS) using Singular Learning Theor...

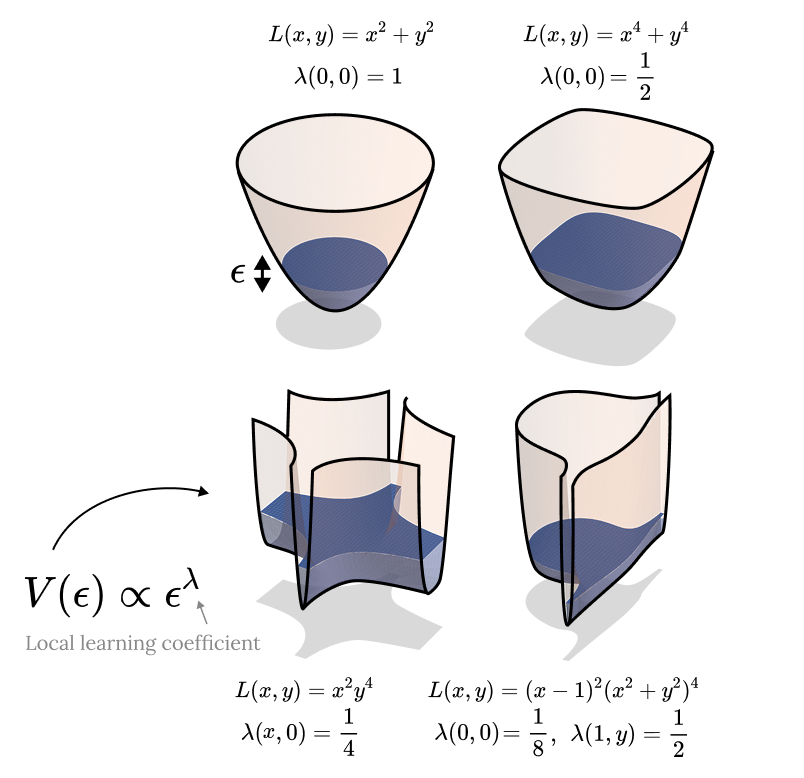

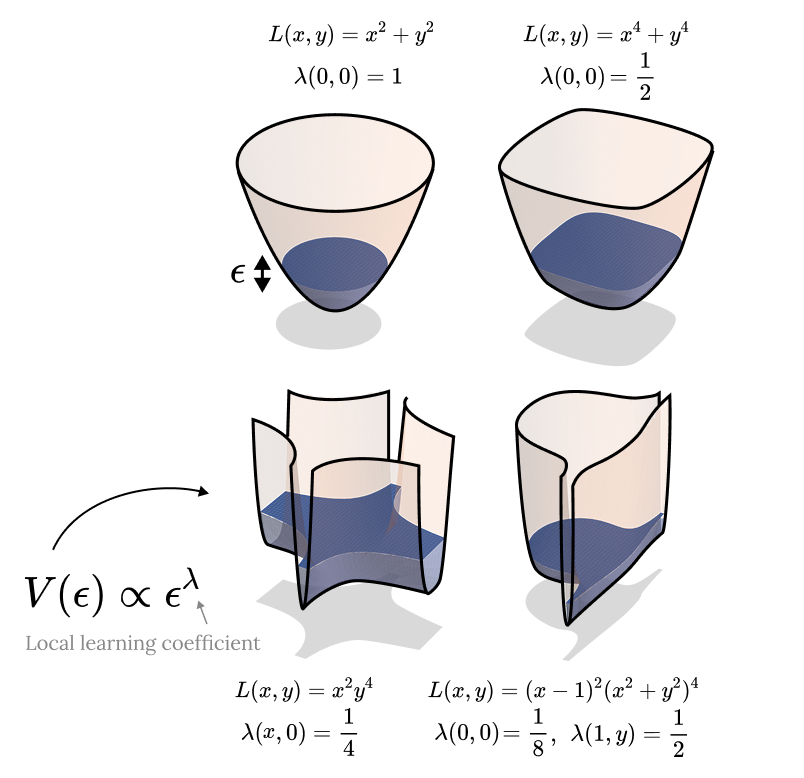

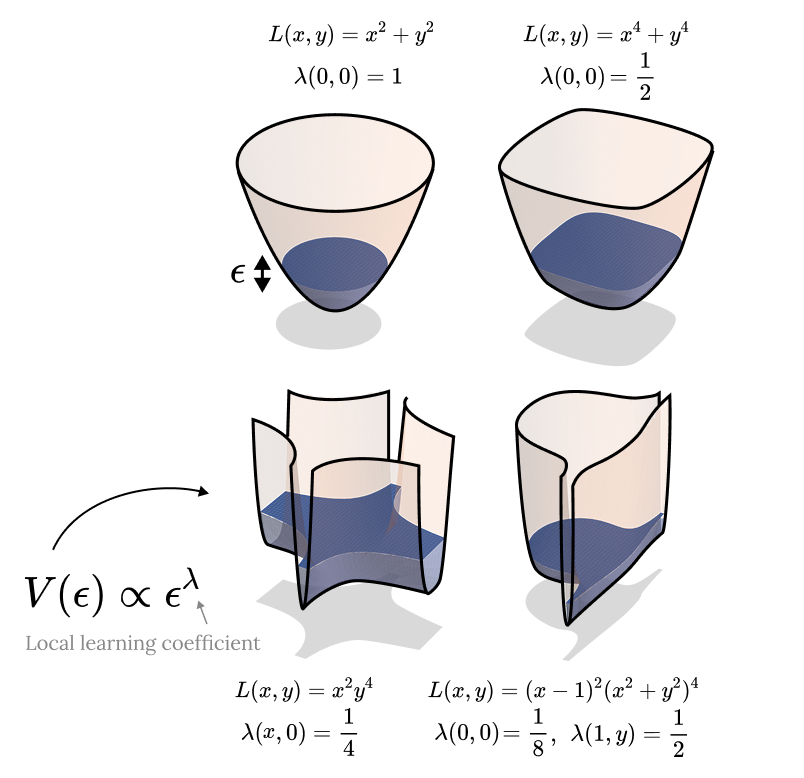

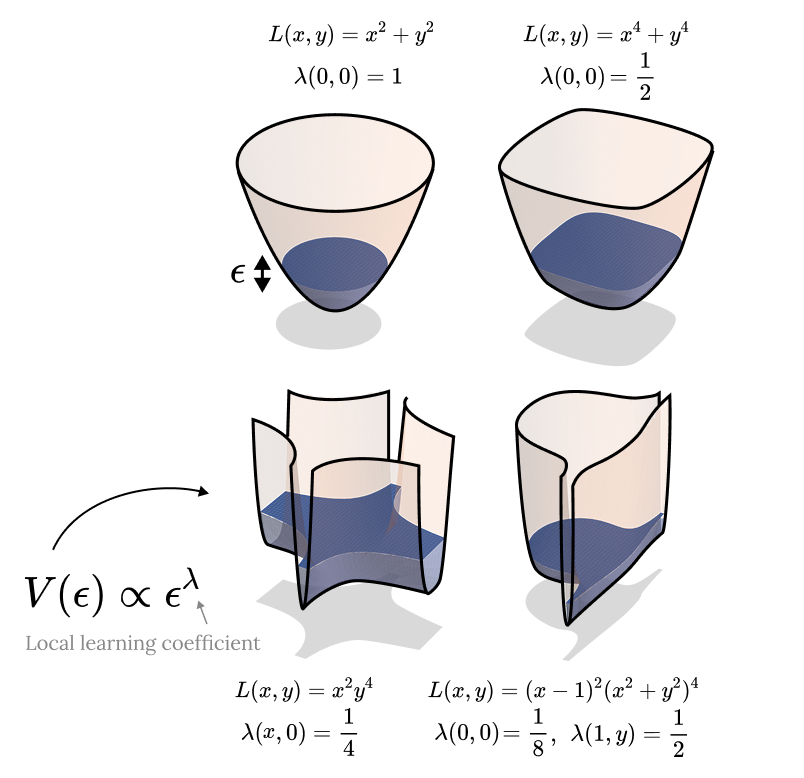

The Local Learning Coefficient: A Singularity-Aware Complexity Measure

August 23, 2023 | Lau et al. | AISTATS 2025

Deep neural networks (DNNs) are singular statistical models that exhibit complex degeneracies.